As the availability of ChatGPT Search expands, understanding its indexing mechanics will be vital for digital visibility.

While Bing’s index plays a key role, OpenAI’s system surfaces content using its own crawlers and attribution methods.

Here is a breakdown of the technical requirements for ensuring your website is indexed correctly.

Technical Framework

ChatGPT Search combines Bing’s search index with OpenAI’s proprietary technology.

According to OpenAI’s technical documentation, the search model is a fine-tuned version of GPT-4o, post-trained using synthetic data generation techniques that include distilling outputs from OpenAI’s o1-preview model.

The platform employs three distinct crawlers, each serving different purposes.

OAI-SearchBot is the primary crawler for search functionality, while ChatGPT-User handles real-time user requests and lets ChatGPT interact directly with external applications.

The third crawler, GPTBot, collects content for AI model training and can be blocked without affecting search visibility.
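To see which of these crawlers is visiting a site, server logs can be filtered by the product token in each request’s User-Agent header. The Python sketch below assumes the tokens are spelled exactly as the crawler names above (OAI-SearchBot, ChatGPT-User, GPTBot) and that a case-insensitive substring match is enough; the `OPENAI_CRAWLERS` mapping and the `classify_openai_crawler` helper are hypothetical, and the sample header strings are illustrative rather than OpenAI’s exact ones.

```python
from typing import Optional

# Hypothetical mapping from the product tokens named in this article
# to the roles described above.
OPENAI_CRAWLERS = {
    "OAI-SearchBot": "search indexing",
    "ChatGPT-User": "real-time user requests",
    "GPTBot": "model training",
}


def classify_openai_crawler(user_agent: str) -> Optional[str]:
    """Return the role of the OpenAI crawler named in a User-Agent header, or None."""
    ua = user_agent.lower()
    for token, role in OPENAI_CRAWLERS.items():
        # Case-insensitive substring match on the product token.
        if token.lower() in ua:
            return role
    return None


if __name__ == "__main__":
    # Illustrative header values, not OpenAI's exact strings.
    for header in ("Mozilla/5.0 (compatible; OAI-SearchBot/1.0)",
                   "Mozilla/5.0 (compatible; GPTBot/1.1)",
                   "Mozilla/5.0 (compatible; bingbot/2.0)"):
        print(header, "->", classify_openai_crawler(header))
```

A User-Agent header can be spoofed, so a match like this is useful for log analysis but does not prove that a request actually came from OpenAI.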

Implementation

Proper indexing begins with robots.txt configuration.

Your website’s robots.txt should explicitly allow OAI-SearchBot, with separate rules for each of the other OpenAI crawlers.
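One way to sanity-check such a configuration is to parse it with Python’s standard-library `urllib.robotparser`. The sketch below uses an illustrative robots.txt that allows OAI-SearchBot and ChatGPT-User, blocks GPTBot, and allows all other crawlers; the file contents, the example.com URL and the `crawler_access` helper are assumptions for illustration, not a file recommended by OpenAI.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt: search and user-triggered crawlers allowed,
# the training crawler (GPTBot) blocked, everyone else allowed.
ROBOTS_TXT = """\
User-agent: OAI-SearchBot
Allow: /

User-agent: ChatGPT-User
Allow: /

User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def crawler_access(robots_txt: str, url: str, agents: list[str]) -> dict[str, bool]:
    """Return whether each user-agent token may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    # parse() works on in-memory lines, so nothing is fetched over the network.
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    agents = ["OAI-SearchBot", "ChatGPT-User", "GPTBot"]
    result = crawler_access(ROBOTS_TXT, "https://example.com/blog/post", agents)
    for agent, allowed in result.items():
        print(f"{agent}: {'allowed' if allowed else 'blocked'}")
```

Pass the product token (such as `OAI-SearchBot`) rather than a full User-Agent string, because the parser only compares the text before the first `/`. `RobotFileParser` also applies the first matching rule within a group, which may differ from how a given crawler resolves conflicting Allow and Disallow lines, so treat the result as a quick check rather than a guarantee of crawler behavior.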

Beyond this basic configuration, websites should make sure they are properly indexed by Bing and maintain a clear site architecture.

It’s worth noting that allowing OAI-SearchBot doesn’t automatically mean the content will be used for AI training.

After a site updates its robots.txt, OpenAI’s systems can take approximately 24 hours to adjust to the new crawling directives.

Content Attribution

ChatGPT Search includes several key features for content publishers:

- Source Attribution: All referenced content includes proper citation

- Source Sidebar: Provides reference links for verification

- Multiple Citation Opportunities: A single query can generate multiple source citations

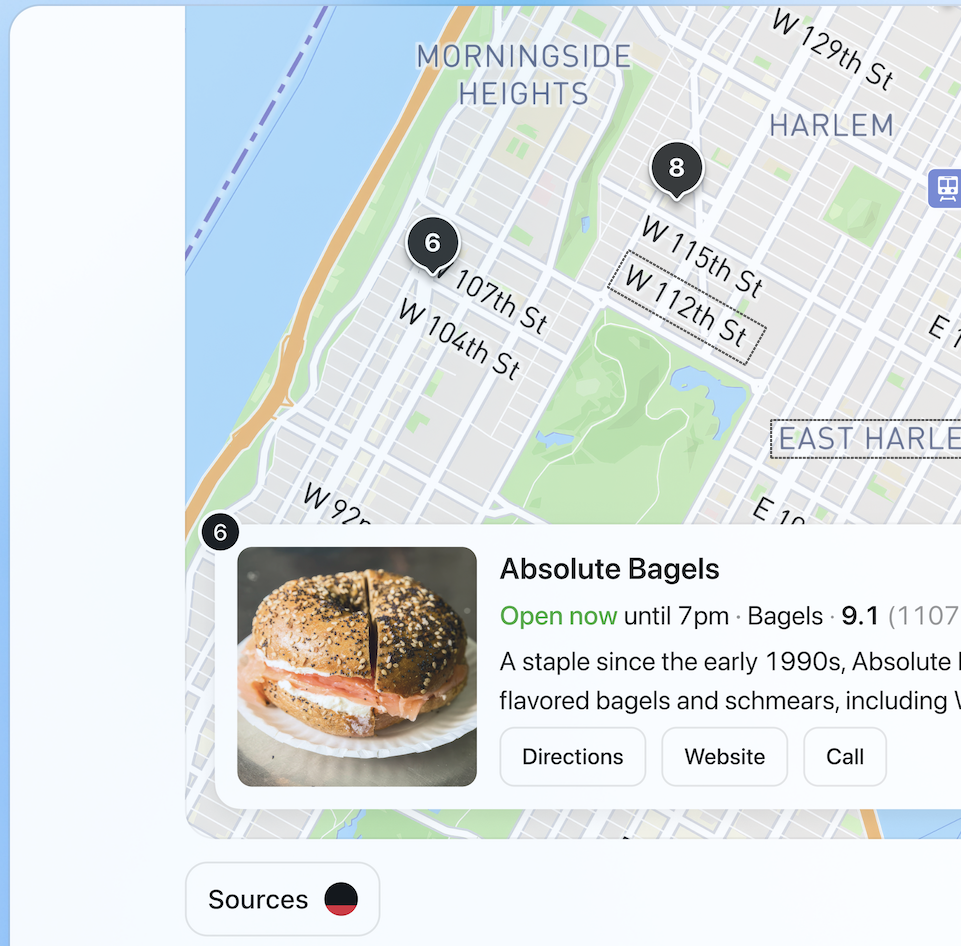

- Locations: Searches for specific locations will return an interactive map, as shown below.

Image Credit: OpenAI

Additional Considerations

Recent testing has revealed several important factors:

- Content freshness affects visibility

- Pages behind paywalls can still be cited

- URLs returning 404 errors may still appear in citations

- Multiple pages from the same domain can be referenced in a single response

Recommendations

Getting indexed in ChatGPT Search requires ongoing attention to technical health, including regularly verifying the robots.txt file and crawler access.
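Because a robots.txt edit can quietly revoke access, one lightweight form of regular verification is to compare the old and new versions of the file before deploying a change. This sketch, again using Python’s `urllib.robotparser`, reports every crawler and URL pair whose access changed; the `access_changes` helper, the agent list and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical list of OpenAI user-agent tokens to audit.
AGENTS = ("OAI-SearchBot", "ChatGPT-User", "GPTBot")


def _parse(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text without fetching anything."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def access_changes(old_txt: str, new_txt: str, urls: list[str],
                   agents: tuple[str, ...] = AGENTS) -> list[tuple[str, str, bool, bool]]:
    """Return (agent, url, was_allowed, now_allowed) for each access that changed."""
    old, new = _parse(old_txt), _parse(new_txt)
    changes = []
    for agent in agents:
        for url in urls:
            before = old.can_fetch(agent, url)
            after = new.can_fetch(agent, url)
            if before != after:
                changes.append((agent, url, before, after))
    return changes


if __name__ == "__main__":
    old = "User-agent: *\nAllow: /\n"
    # Hypothetical update: blocks GPTBot as intended, but the broad rule
    # also hides /blog/ from every other crawler, including OAI-SearchBot.
    new = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nDisallow: /blog/\n"
    urls = ["https://example.com/blog/a", "https://example.com/about"]
    for agent, url, before, after in access_changes(old, new, urls):
        print(f"{agent} {url}: {before} -> {after}")
```

A `True -> False` change for GPTBot may be intentional, but the same change for OAI-SearchBot is the kind of regression worth catching, since OAI-SearchBot needs access for search visibility.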

Publishers should prioritize factual accuracy and up-to-date information, and give their content a clear structure.

This helps keep pages accessible to both traditional search engines and AI-powered platforms, supporting broader visibility.

Featured Image: designkida/Shutterstock