Revelations that OpenAI secretly funded and had access to the FrontierMath benchmarking dataset are raising concerns about whether the dataset was used to train its o3 AI reasoning model, and about the validity of the model’s high scores.

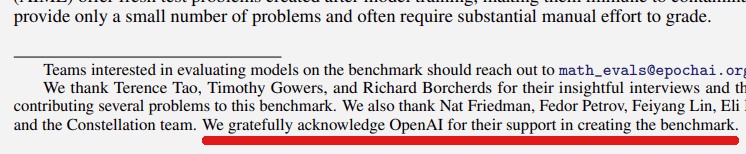

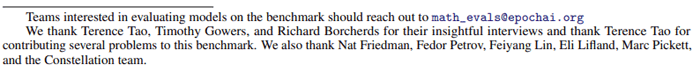

In addition to accessing the benchmarking dataset, OpenAI funded its creation, a fact that was withheld from the mathematicians who contributed to developing FrontierMath. Epoch AI disclosed OpenAI’s funding only belatedly, in the final version of the paper announcing the benchmark, published on arXiv.org. Earlier versions of the paper omitted any mention of OpenAI’s involvement.

Screenshot Of FrontierMath Paper

Closeup Of Acknowledgement

Previous Version Of Paper That Lacked Acknowledgement

OpenAI o3 Model Scored Highly On FrontierMath Benchmark

The news of OpenAI’s secret involvement is raising questions about the high scores achieved by the o3 reasoning model and causing disappointment with the FrontierMath project. Epoch AI has responded by explaining what happened and what it is doing to check whether the o3 model was trained on the FrontierMath dataset.

Giving OpenAI access to the dataset was unexpected because the whole point of a benchmark is to test AI models, which can’t be done reliably if the models have already seen the questions and answers.

A post in the r/singularity subreddit expressed this disappointment and cited a document that claimed that the mathematicians didn’t know about OpenAI’s involvement:

“Frontier Math, the recent cutting-edge math benchmark, is funded by OpenAI. OpenAI allegedly has access to the problems and solutions. This is disappointing because the benchmark was sold to the public as a means to evaluate frontier models, with support from renowned mathematicians. In reality, Epoch AI is building datasets for OpenAI. They never disclosed any ties with OpenAI before.”

The Reddit discussion cited a publication that revealed OpenAI’s deeper involvement:

“The mathematicians creating the problems for FrontierMath were not (actively) communicated to about funding from OpenAI.

…Now Epoch AI or OpenAI don’t say publicly that OpenAI has access to the exercises or answers or solutions. I have heard second-hand that OpenAI does have access to exercises and answers and that they use them for validation.”

Tamay Besiroglu, associate director at Epoch AI, acknowledged that OpenAI had access to the dataset but also asserted that there was a “holdout” set that OpenAI didn’t have access to.

He wrote in the cited document:

“Tamay from Epoch AI here.

We made a mistake in not being more transparent about OpenAI’s involvement. We were restricted from disclosing the partnership until around the time o3 launched, and in hindsight we should have negotiated harder for the ability to be transparent to the benchmark contributors as soon as possible. Our contract specifically prevented us from disclosing information about the funding source and the fact that OpenAI has data access to much but not all of the dataset. We own this error and are committed to doing better in the future.

Regarding training usage: We acknowledge that OpenAI does have access to a large fraction of FrontierMath problems and solutions, with the exception of a unseen-by-OpenAI hold-out set that enables us to independently verify model capabilities. However, we have a verbal agreement that these materials will not be used in model training.

OpenAI has also been fully supportive of our decision to maintain a separate, unseen holdout set—an extra safeguard to prevent overfitting and ensure accurate progress measurement. From day one, FrontierMath was conceived and presented as an evaluation tool, and we believe these arrangements reflect that purpose.”

More Facts About OpenAI & FrontierMath Revealed

Elliot Glazer, the lead mathematician at Epoch AI, confirmed that OpenAI has the dataset and was allowed to use it to evaluate o3, OpenAI’s next state-of-the-art large language model, which the company describes as a reasoning model. He offered his opinion that o3’s high scores are “legit” and said that Epoch AI is conducting an independent evaluation to determine whether o3 was trained on the FrontierMath dataset, a finding that could cast the model’s high scores in a different light.

He wrote:

“Epoch’s lead mathematician here. Yes, OAI funded this and has the dataset, which allowed them to evaluate o3 in-house. We haven’t yet independently verified their 25% claim. To do so, we’re currently developing a hold-out dataset and will be able to test their model without them having any prior exposure to these problems.

My personal opinion is that OAI’s score is legit (i.e., they didn’t train on the dataset), and that they have no incentive to lie about internal benchmarking performances. However, we can’t vouch for them until our independent evaluation is complete.”

Glazer had also shared that Epoch AI was going to test o3 using a “holdout” dataset that OpenAI didn’t have access to, saying:

“We’re going to evaluate o3 with OAI having zero prior exposure to the holdout problems. This will be airtight.”

In another Reddit post, Glazer described how the “holdout set” is being created:

“We’ll describe the process more clearly when the holdout set eval is actually done, but we’re choosing the holdout problems at random from a larger set which will be added to FrontierMath. The production process is otherwise identical to how it’s always been.”

Waiting For Answers

That’s where the drama stands until Epoch AI completes its evaluation, which should indicate whether OpenAI trained its AI reasoning model on the dataset or only used it for benchmarking.

Featured Image by Shutterstock/Antonello Marangi