How does the rapid growth of bots change the open web?

Depending on the source, between roughly a third and half of the web’s traffic comes from bots:

- Imperva’s Bad Bot Report 2024 reveals that almost 50% of total internet traffic wasn’t human in 2024. Trend: the human share is declining.

- Cloudflare’s Radar shows ~70% human traffic and 30% from bots.

- Akamai reports that 42% of traffic on the web comes from bots.

So far, bots have collected information that makes apps better for humans. But a new species is growing in population: agentic bots.

For two decades, we optimized sites for GoogleBot. Soon, we could focus on AI helpers that act as intermediaries between humans and the open web.

We have already optimized for the BotNet with Schema and product feeds in Google’s Merchant Center. XML sitemaps became table stakes decades ago.

At the next level, we could have separate websites or APIs for agentic bots with a whole new marketing playing field.

Image Credit: Lyna ™

Agentic Web

As bot traffic gets close to overtaking human traffic on the open web, it’s essential to keep in mind that about 65% of bot traffic is estimated to be malicious.

Good bots include scrapers from search engines, SEO tools, security defense, and, of course, AI crawlers. Both types of bots are growing in population, but only the good ones are really useful.

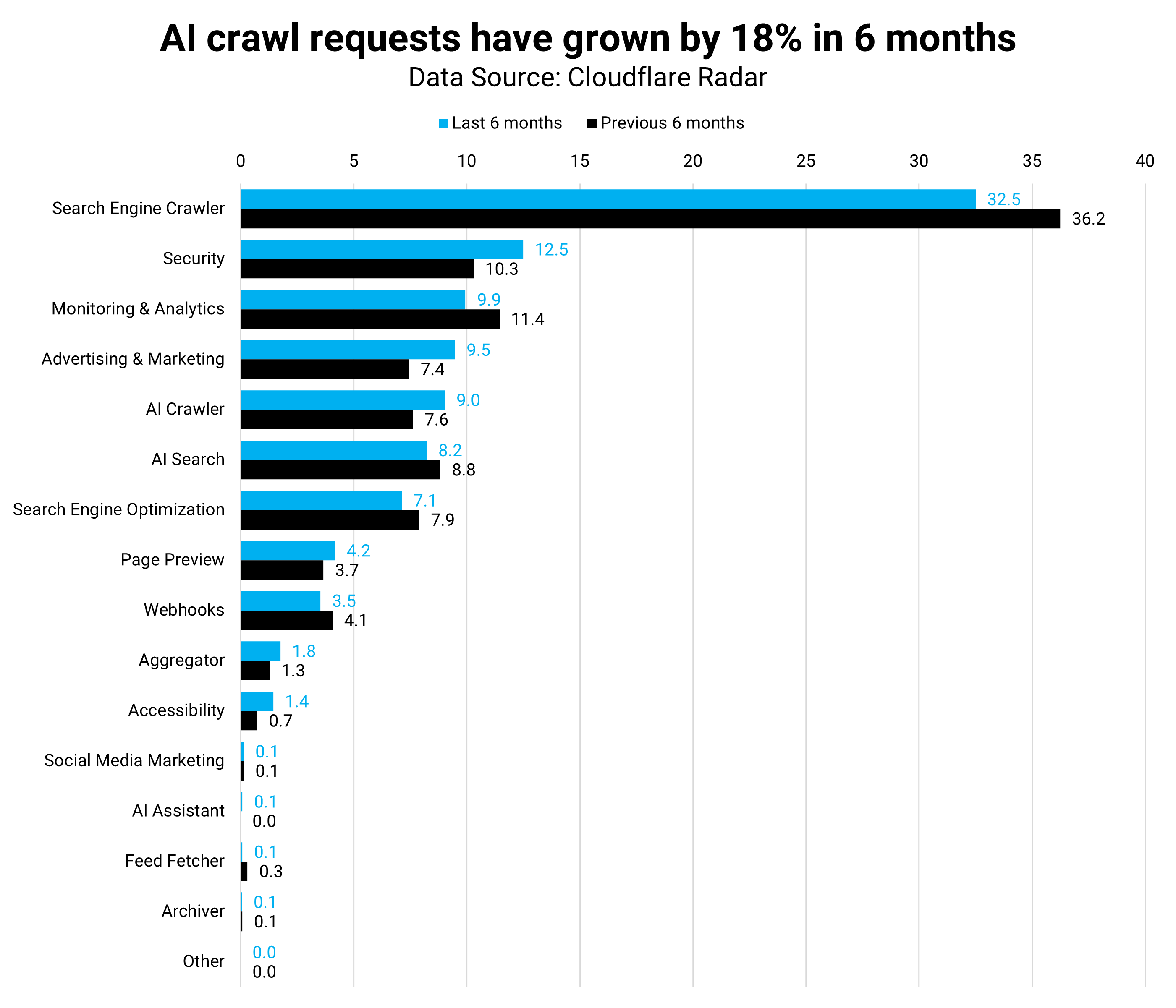

Comparing the last six months with the six months before, AI crawlers grew by 18% while search engine crawlers slowed down by 10%. GoogleBot specifically reduced its activity by 1.6%.

However, Google’s AI crawler made up for it with an increase of 1.4%. GPT Bot was the most active AI crawler, with 3.8% of all requests – and grew 12%.

Google’s AI crawler grew by 62% and was responsible for 3.7% of all bot requests. Given its current growth rate, Google’s AI crawler should soon be the most active on the web.

Image Credit: Kevin Indig

Today, AI bots have three goals:

- Collect training data.

- Build a search index to ground LLM answers (RAG).

- Collect real-time data for prompts that demand freshness.

But currently, all big AI developers are working on agents that browse the web and take action for users:

- Claude was first with its “Computer Use” product feature: “Developers can direct Claude to use computers the way people do—by looking at a screen, moving a cursor, clicking buttons, and typing text.”

- Google’s Jarvis, “a helpful companion that surfs the web for you,” briefly and accidentally appeared on the Chrome store.

- OpenAI is working on “Operator,” also an agent that takes action for you.

I see three possible outcomes:

- Agents grow bot traffic on the open web significantly as they crawl and visit websites.

- Agents use APIs to get information.

- Agents operate on their platform, meaning Operator uses only data from ChatGPT instead of collecting its own.

I think a mix of all three is most likely, with the result that bot traffic would grow significantly.

If that turns out to be true, companies will increasingly build separate versions of their site for bots geared towards speed and structured data. “Just focus on the user” becomes “just focus on the agents.”

In a way, ChatGPT Search is already an agent that browses and curates the web for humans. But if agents keep their promise, you can expect a lot more.

OpenAI CEO Sam Altman said in his recent Reddit AMA, “I think the thing that will feel like the next giant breakthrough will be agents.”

As bots conquer the web, what are humans doing?

Internet Adoption

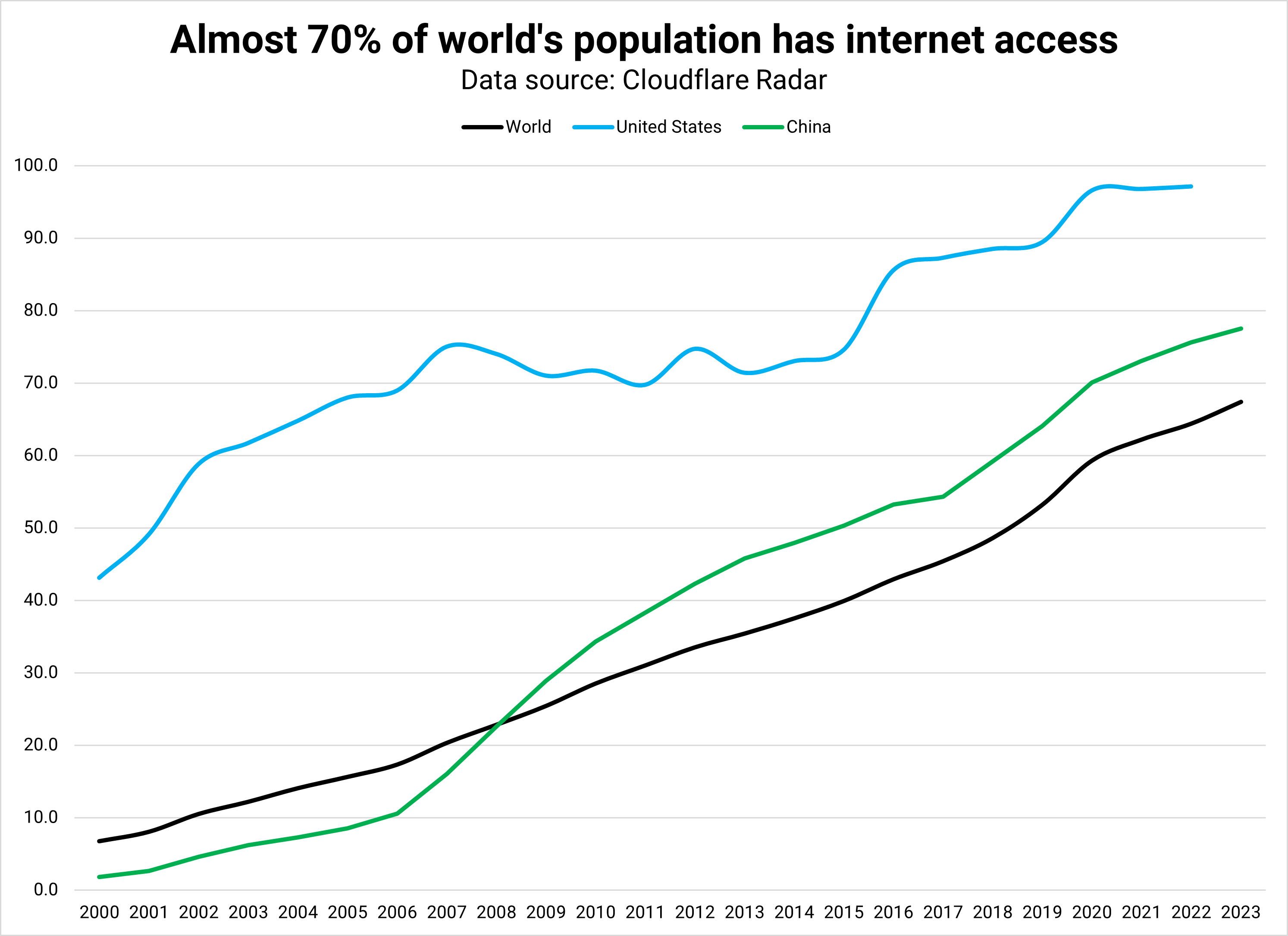

Image Credit: Kevin Indig

It’s unlikely that humans will stop browsing the web completely.

Even with AI answers in Search and search features in AI chatbots, humans still want to verify AI statements, get randomly inspired (serendipity), or seek answers from other humans (Reddit).

But the act of browsing to search will likely dissolve into prompts if bots get good enough.

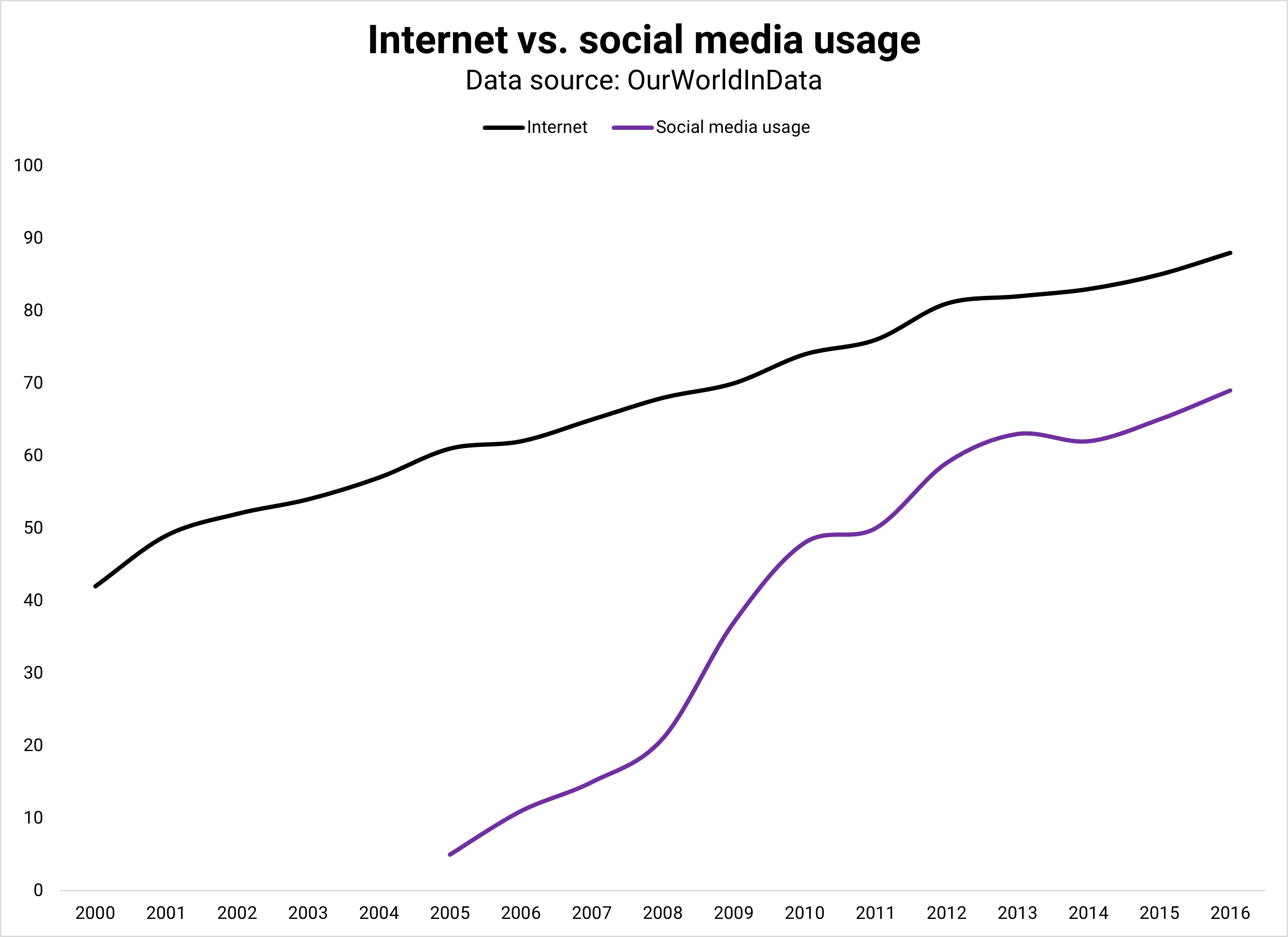

There is room for growth in human traffic: 70% of the world’s population had internet access in 2023. At the current pace (~7% Y/Y), the whole world would have internet access by 2030.
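The projection above can be checked with a few lines of arithmetic. This is a minimal sketch that assumes the ~7% figure is compound year-over-year growth of the share of people online, starting from 70% in 2023; the function name is made up for illustration.

```python
import math

def years_to_full_adoption(share: float, yearly_growth: float) -> float:
    """Years until share * (1 + yearly_growth) ** n reaches 100%.

    Assumes steady compound growth, which is a simplification.
    """
    return math.log(1.0 / share) / math.log(1.0 + yearly_growth)

# 70% adoption in 2023, growing ~7% per year (figures from the text above).
years = years_to_full_adoption(0.70, 0.07)
print(f"{years:.1f} years after 2023")  # prints "5.3 years after 2023"
```

Under these assumptions, full adoption would arrive a little over five years after 2023, which is consistent with the "by 2030" estimate. In practice, growth tends to slow as adoption approaches saturation.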

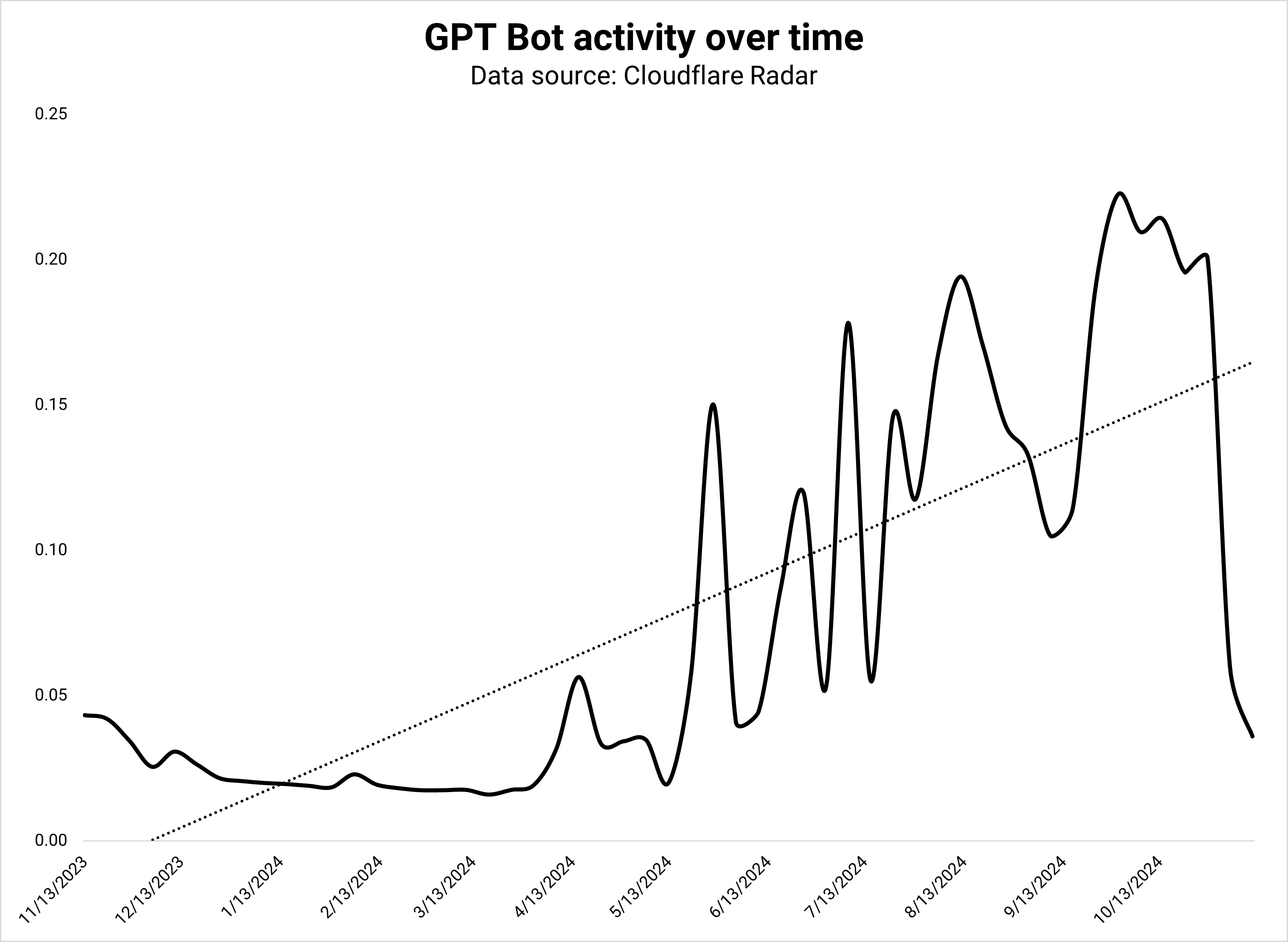

However, despite growing internet adoption, human traffic has been flat over the last three years (see Cloudflare stats). AI crawler traffic is growing much faster (18%), and agents might speed that growth up even more.

Image Credit: Kevin Indig

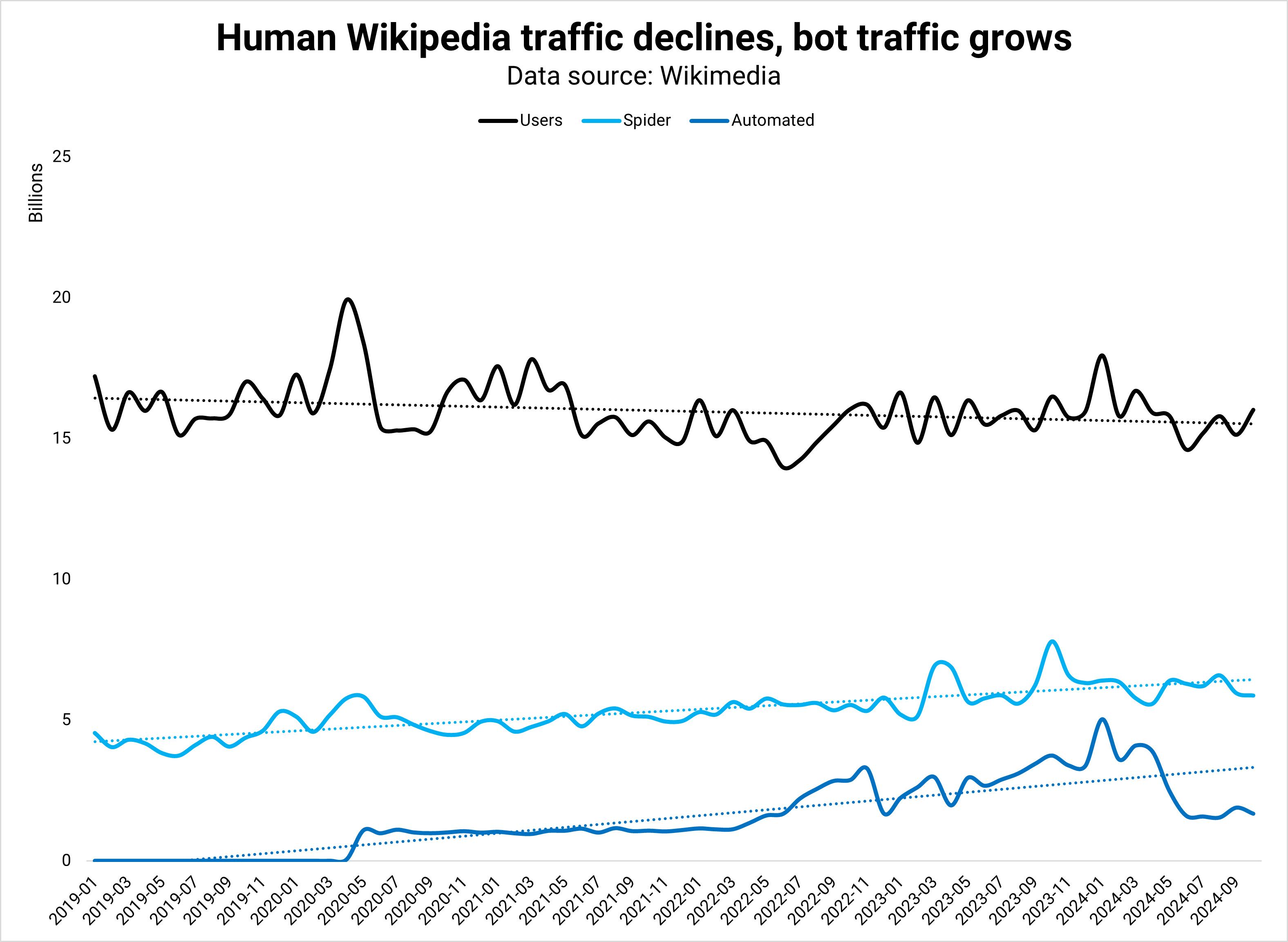

Human requests to Wikipedia, one of the web’s largest sites, have stagnated since 2019.

The reason is that human attention is shifting to social platforms, especially among younger generations. As the bots take the open web, humans flee to engagement retreats.

Marketing

Image Credit: Kevin Indig

In the distant future, good bots could become equal-rights citizens of the open web nation as more humans spend time on closed social platforms and agentic LLMs accelerate the already fast growth of bot traffic. If this theory plays out, what does it mean for marketing and specifically SEO?

Imagine booking a trip. Instead of browsing Google or Booking, you tell your agent where you are thinking about going and when.

Based on what it knows about your preferences, your agent curates three options of flights and hotels from your platform of choice.

When you pick a flight, it’s added to your calendar, and the tickets are in your inbox. You don’t need to check out. The agent does everything for you. You could apply the same scenario to ecommerce or software.

Given that companies like Google already have the capabilities to build this today, it’s worth thinking about what would remain constant, what would change, and what would become more and less important in this vision.

Constant

Bots don’t need CSS or hero images. There is no downside to cloaking your site for LLM crawlers, so there is a chance that websites show bots a barebones version.
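As a rough illustration of what a barebones version for bots could look like, the sketch below picks a response variant based on the User-Agent header. The token list, function names, and page format are assumptions made for this example, not an established practice or a recommendation from any crawler operator.

```python
# Illustrative, non-exhaustive list of AI crawler user-agent tokens.
AI_CRAWLER_TOKENS = ("gptbot", "claudebot")

def is_ai_crawler(user_agent: str) -> bool:
    """Return True if the User-Agent contains a known AI crawler token."""
    ua = user_agent.lower()
    return any(token in ua for token in AI_CRAWLER_TOKENS)

def render_page(user_agent: str, title: str, body_text: str) -> str:
    """Serve plain text to AI crawlers and full HTML to everyone else."""
    if is_ai_crawler(user_agent):
        # No CSS, no hero image: just the content.
        return f"# {title}\n\n{body_text}\n"
    return (
        "<html><head><link rel='stylesheet' href='/site.css'></head>"
        f"<body><h1>{title}</h1><p>{body_text}</p></body></html>"
    )

print(render_page("Mozilla/5.0 (compatible; GPTBot/1.0)", "Red Shoes", "In stock."))
```

User-agent strings are easy to spoof, so a check like this only separates well-behaved bots from browsers; it is not a security measure.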

A skill set that remains constant in this future is technical SEO: crawlability, (server) speed, internal linking, and structured data.

Change

An agentic open web offers much better ad targeting capabilities since bots know their owners inside out.

Humans will make purchase decisions a lot faster since their agents give them all the information they need and know their preferences.

Advertising costs drop significantly, and ads offer even higher returns than they already do today.

Since bots can translate anything in seconds, localization and international sales are no longer a problem. Humans can buy from anyone, anywhere, constrained only by shipping and inventory. The global economy opens up even further.

If we play it right, agents could be the ultimate safe-keepers of privacy: No one has as much data about you as they do, but you can control how much they share.

Agents know you but don’t need to share that information with others. We might share even more data with them, spinning the value flywheel of data → understanding → results → data → value → results → etc.

On the downside, we need to build defenses against rogue bots by redefining what bots are allowed to do in a robots.txt 2.0-like format. Cybersecurity becomes even more important, but also more complex, since bad bots can mimic good ones so much better.
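For context, this is roughly what today’s robots.txt can express, checked with Python’s standard `urllib.robotparser`. The rules and URLs below are made up for illustration. Note that the format only covers which paths a bot may fetch; it has no vocabulary for actions such as buying or booking, which is the gap a "robots.txt 2.0" would have to fill.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: keep one AI crawler out of checkout pages.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /checkout/

User-agent: *
Disallow: /admin/
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
for agent, url in [
    ("GPTBot", "https://example.com/checkout/cart"),
    ("GPTBot", "https://example.com/products/1"),
    ("SomeOtherBot", "https://example.com/checkout/cart"),
]:
    print(agent, url, parser.can_fetch(agent, url))
```

Compliance is voluntary: a rogue bot can simply ignore these rules, which is why the defenses mentioned above would need more than a text file.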

We’ll need to figure out the environmental impact of more energy consumption by higher bot traffic.

Hopefully, bots will be more efficient and, therefore, cause less total web traffic than humans. This would at least somewhat offset the surge in energy consumption LLMs are already causing.

Important

The most bot-friendly format of information is raw and structured: XML, RSS, and API feeds. We already send product feeds and XML sitemaps to Google, but agents will want more.

Web design will be less important in the future, and maybe that’s okay since most sites look very similar anyway.

Feed design becomes more important: what information to include in feeds, how much, how often to update it, and what responses to send back to bots.

Marketers will spend a lot more time reverse-engineering what conversations with chatbots look like. Bots will likely be a black box like Google’s algorithm, but advertising could shed light on what people ask the most.
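To make the feed design questions concrete, here is a minimal sketch of a product feed for agents. The field names (`sku`, `in_stock`, `generated_at`, and so on) are hypothetical choices for this example and do not follow any particular feed specification.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone

@dataclass
class FeedItem:
    """One product entry; which fields to expose is the design decision."""
    sku: str
    name: str
    price: float
    currency: str
    in_stock: bool

def build_feed(items: list[FeedItem], generated_at: datetime) -> str:
    """Serialize items to JSON with a timestamp so bots can judge freshness."""
    return json.dumps(
        {
            "generated_at": generated_at.isoformat(),
            "item_count": len(items),
            "items": [asdict(item) for item in items],
        },
        indent=2,
    )

items = [FeedItem("sku-1", "Red Shoes", 59.90, "USD", True)]
print(build_feed(items, datetime.now(timezone.utc)))
```

The timestamp addresses the "how often to update" question: an agent that needs fresh prices or inventory can compare `generated_at` against its own freshness requirement before trusting the data.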

Relationships

In the agentic future, once customers have settled on a brand they like, it’s hard to get them to switch unless they have a bad experience.

As a result, an important marketing lever will be getting customers to try your brand with campaigns like discounts and exclusive offers.

Once you have conviction and signal that your product is better, the quest becomes persuading users to try it out. Obviously, that already works today.

Today, with so many opportunities to advertise and sway users towards a specific product organically before they buy, we have a lot of influence on the purchase.

But, in the future, agents could make those choices for users. Marketers will spend more time on relationship building, building brand awareness, and influencing old-school marketing factors like pricing, distribution (shipping), and differentiation.

Cloudflare Radar: Traffic Trends

Bots Compose 42% of Overall Web Traffic; Nearly Two-Thirds Are Malicious

Cloudflare Radar: Data Explorer

Introducing computer use, a new Claude 3.5 Sonnet, and Claude 3.5 Haiku

Google’s Jarvis AI extension existence leaked on the Chrome store

OpenAI Nears Launch of AI Agent Tool to Automate Tasks for Users

Featured Image: Paulo Bobita/Search Engine Journal