Celebrate the Holidays with some of SEJ’s best articles of 2023.

Our Festive Flashback series runs from December 21 – January 5, featuring daily reads on significant events, fundamentals, actionable strategies, and thought leader opinions.

2023 has been quite eventful in the SEO industry and our contributors produced some outstanding articles to keep pace and reflect these changes.

Catch up on the best reads of 2023 to give you plenty to reflect on as you move into 2024.

Chatbots are taking the world by storm.

SEO pros, writers, agencies, developers, and even teachers are discussing the changes that this technology will cause in society and how we work in our day-to-day lives.

ChatGPT’s release on November 30, 2022, led to a cascade of competition, including Bard and Bing Chat, although the latter runs on OpenAI’s technology.

If you want to search for information, need help fixing bugs in your CSS, or want to create something as simple as a robots.txt file, chatbots may be able to help.

They’re also wonderful for topic ideation, allowing you to draft more interesting emails, newsletters, blog posts, and more.

But which chatbot should you use and learn to master? Which platform provides accurate, concise information?

Let’s find out.

What Is The Difference Between ChatGPT, Google Bard, And Bing Chat?

| | ChatGPT | Bard | Bing |
| --- | --- | --- | --- |
| Pricing | ChatGPT’s original version remains free to users. ChatGPT Plus is available for $20/month. | Free for users who joined the waitlist and are accepted. | Free for users who are accepted after joining the waitlist. |
| API | Yes, but on a waitlist. | N/A | N/A |
| Developer | OpenAI | Alphabet/Google | Microsoft (built on OpenAI’s technology) |
| Technology | GPT-4 | LaMDA | GPT-4 |
| Information Access | Training data with a cutoff date of 2021. The chatbot does state that it has been trained beyond this year, although it won’t include that information. | Real-time access to the data Google collects from search. | Real-time access to Bing’s search data. |

Wait! What Is GPT? What Is LaMDA?

ChatGPT uses GPT technology, and Bard uses LaMDA, meaning they’re different “under the hood.” This is why there’s some backlash against Bard. People expect Bard to be GPT, but that’s not the intent of the product.

Also, although Bing has chosen to collaborate with OpenAI, it uses fine-tuning, which allows it to tune responses for the end user.

Since Bing and Bard are both available on such a wide scale, they have to tune their responses to maintain their brand image and adhere to internal policies, which are more restrictive than ChatGPT’s – at the moment.

GPT: Generative Pre-trained Transformer

GPTs are trained on tons of data using a two-phase concept called “unsupervised pre-training and then fine-tuning.” Imagine consuming billions of data points, and then someone comes along after you gain all of this knowledge to fine-tune it. That’s what is happening behind the scenes when you prompt ChatGPT.

GPT-3, the model family ChatGPT was originally built on, has 175 billion parameters and was trained on text from sources including:

- Articles.

- Books.

- Websites.

- Etc.

While ChatGPT is limited in its datasets, OpenAI has announced a browser plugin that can use real-time data from websites when responding to you. There are also other neat plugins that amplify the power of the bot.

LaMDA Stands For Language Model For Dialogue Applications

Google’s team decided to follow a LaMDA model for its neural network because it is a more natural way to respond to questions. The goal of the team was to provide conversational responses to queries.

The platform is trained on conversations and human dialog, but it is also apparent that Google uses search data to provide real-time data.

Google trains LaMDA on a dataset called Infiniset, which we really don’t know much about at this point, as Google has kept the details private.

Since these bots are learning from sources worldwide, they also have a tendency to provide false information.

Hallucinations Can Happen

Chatbots can hallucinate, but they’re also very convincing in their responses. It’s important to heed the warning of the developers.

Google tells us:

Screenshot from Google Bard, April 2023

Bing also tells us:

Screenshot from Bing Chat, April 2023

If you’re using chatbots for anything that requires facts and studies, be sure to crosscheck your work and verify that the facts and events actually happened.

There have been times when these hallucinations are apparent and other times when non-experts would easily be fooled by the response they receive.

Since chatbots learn from information, such as websites, they’re only as accurate as the information they receive – for now.

With all of these cautions in mind, let’s start prompting each bot to see which provides the best answers.

ChatGPT Vs. Bard Vs. Bing: Prompt Testing And Examples

Since technical SEO is an area I am passionate about, I wanted to see what the chatbots have to say when I put the following prompt in each:

What Are The Top 3 Technical SEO Factors I Can Use To Optimize My Site?

ChatGPT’s Response

Screenshot from ChatGPT, April 2023

ChatGPT provides a coherent, well-structured response to this query. The response does touch on three important areas of optimization:

- Site speed.

- Mobile responsiveness.

- Site architecture.

When prompted to provide more information on site speed, we receive a lot of great information that you can use to begin optimizing your site.

Screenshot from ChatGPT, April 2023

If you’ve ever tried to optimize your site’s speed, you know just how important all of these factors are.

ChatGPT mentions browser caching, but what about server-side caching?

When site speed is impacted by slow database queries, server-side caching can store the results of these queries and make the site much faster – beyond what a browser cache can do.
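To make the idea of server-side caching a little more concrete, here is a minimal sketch of an in-memory cache with a time-to-live. The `QueryCache` class and the `fetch_popular_posts` function are hypothetical names invented for illustration; a real site would more likely rely on its CMS caching plugin or a dedicated cache server.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class QueryCache:
    """Tiny in-memory cache where each entry expires after a time-to-live (TTL)."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self.clock()
        cached = self._store.get(key)
        if cached is not None and now - cached[0] < self.ttl_seconds:
            return cached[1]  # cache hit: the slow query is skipped
        value = compute()     # cache miss or expired entry: run the slow query
        self._store[key] = (now, value)
        return value


query_count = 0


def fetch_popular_posts() -> list:
    """Hypothetical stand-in for a slow database query."""
    global query_count
    query_count += 1
    return ["post-1", "post-2", "post-3"]


cache = QueryCache(ttl_seconds=60)
first = cache.get_or_compute("popular_posts", fetch_popular_posts)
second = cache.get_or_compute("popular_posts", fetch_popular_posts)
print(query_count)  # the second call is served from the cache
```

The second request never touches the "database," which is the kind of saving a browser cache alone cannot provide, since every new visitor would otherwise trigger the same query.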

Bard’s Response

Bard’s responses are faster than ChatGPT’s, and I do like that you can view other “drafts” from Bard if you like. I went with the first draft, which you can see below.

Screenshot from Google Bard, April 2023

The information is solid, and I do appreciate that Google uses more formatting and bolds parts of the responses to make them easier to read.

Structured data was a nice addition to the list, and Bard even mentions Schema.org in its response.
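For readers who haven’t worked with structured data, here is a rough sketch of what a Schema.org `Article` snippet in JSON-LD might look like when generated from Python. The `article_json_ld` helper and the sample values are assumptions made for illustration; they are not taken from Bard’s response.

```python
import json


def article_json_ld(headline: str, author_name: str, date_published: str) -> str:
    """Build a JSON-LD script tag describing an article with Schema.org types."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    # Values are assumed to be trusted; untrusted input would need extra escaping.
    payload = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{payload}\n</script>'


# Hypothetical sample values.
snippet = article_json_ld("Example Headline", "Jane Doe", "2023-04-01")
print(snippet)
```

The resulting tag would typically be placed in the page’s HTML so search engines can read the article’s details.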

To try and keep things similar, I asked Bard, “Can you elaborate on site speed?”

Screenshot from Google Bard, April 2023

You can certainly find similarities between ChatGPT’s and Bard’s responses about optimization, but some information is a bit off. For example:

“A caching plugin stores static files on the user’s computer, which can improve load time.”

Caching plugins, often installed on your content management system (CMS), will store files on your server, a content delivery network (CDN), in memory, and so on.

However, the response from Bard indicates that the plugin will store static files on the user’s computer, which isn’t entirely wrong, but it’s odd.

Browsers will cache files automatically on their own, and you can certainly manipulate the cache with a Cache-Control or Expires header.

However, caching plugins can do so much more to improve site speed. I think Bard misses the mark a bit, as well as ChatGPT.
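As a rough illustration of the Cache-Control and Expires headers mentioned above, the sketch below builds both for a static asset. The `static_asset_headers` function is a hypothetical helper, and the one-hour lifetime is an arbitrary assumption; the right values depend on how often your files change.

```python
import time
from email.utils import formatdate
from typing import Dict, Optional


def static_asset_headers(max_age_seconds: int,
                         now: Optional[float] = None) -> Dict[str, str]:
    """Return response headers telling browsers how long to cache a file."""
    if now is None:
        now = time.time()
    return {
        # Modern directive: cache for max_age_seconds from the time of the response.
        "Cache-Control": f"public, max-age={max_age_seconds}",
        # Older equivalent: an absolute expiry date in HTTP date format.
        "Expires": formatdate(now + max_age_seconds, usegmt=True),
    }


headers = static_asset_headers(max_age_seconds=3600)
for name, value in headers.items():
    print(f"{name}: {value}")
```

These headers control only the browser’s copy of a file, which is why they are separate from what a server-side caching plugin does.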

Bing’s Response

Screenshot from Bing Chat, April 2023

Bing is so hard to like because, for years, it has missed the mark in search. Is Chat any better? As an SEO and content creator, I love the fact that Bing provides sources in its responses.

I think for content creators who have relied on traffic from search for so long, citing sources is important. Also, when I want to verify a claim, these citations provide clarity that ChatGPT and Google Bard cannot.

The answers are similar to Bard’s and ChatGPT’s, but let’s see what it produces when we ask it to elaborate a little more:

Screenshot from Bing Chat, April 2023

Bing elaborated less than ChatGPT and Bard, providing just three points in its response. But can you spot the overlap between this response and the one from ChatGPT?

- Bing: You should compress your images and use the correct file format (JPEG for photographs, PNG for graphics).

- ChatGPT: You can compress them, reduce their file sizes, and use the correct file format (e.g., JPEG for photos, PNG for graphics).

The responses are going to be very similar for this type of answer, but neither mentioned using a format like WebP. They both seem to be lacking in this regard. Perhaps there’s just more data for optimizing JPEG and PNG files, but will this change?

This raises an interesting question: What if thousands of articles are created to provide the wrong advice, such as eliminating images completely?

Let’s move on to website caching. Bing’s response is a little more in-depth, explaining what caching can help you achieve, such as a lower time to first byte (TTFB).

Winner: Bing. I thought ChatGPT would win this query, but it turns out Bing provides a little more information on caching and wins out in the “technical” arena. Bard and ChatGPT did provide more solutions for improving your site speed.

Who Is Ludwig Makhyan?

All chatbots knew a little something about technical SEO, but how about me? Let’s see what happens when I ask them about myself:

ChatGPT’s Response

Screenshot from ChatGPT, April 2023

ChatGPT couldn’t find any information about me, which is understandable. I’m not Elon Musk or a famous person, but I did publish a few articles on this very blog you’re reading now before the data cutoff date of ChatGPT.

I have a feeling that Bing and Bard will do a little better for this query.

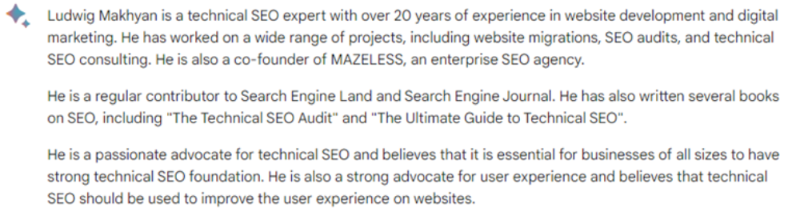

Bard’s Response

Screenshot from Google Bard, April 2023

Hmm. The first sentence seems a bit familiar. It came directly from my Search Engine Journal bio, word-for-word. The last sentence in the first paragraph also comes word-for-word from another publication that I write for: “He is the co-founder at MAZELESS, an enterprise SEO agency.”

I’m also not the author of either of these books, although I’ve talked about these topics in great detail before.

Unfortunately, pulling full sentences from sources and providing false information means Bard failed this test. You could argue that there are a few ways to rephrase those sentences, but the response could certainly be better.

Bing’s Response

Screenshot from Bing Chat, April 2023

Bing also took my profile information directly, and most of the other information is the same, too. Bing does provide a much shorter response and links to the sources.

From this data, it seems to me that there needs to be a lot of references for chatbots to work from to define a person. But let’s see what these bots can do with a better prompt that is a bit more advanced.

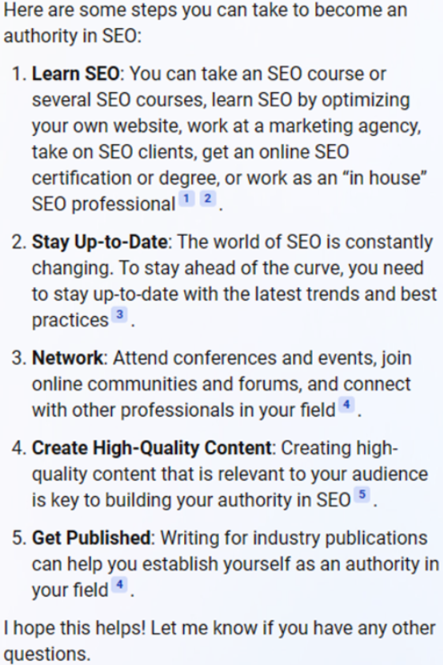

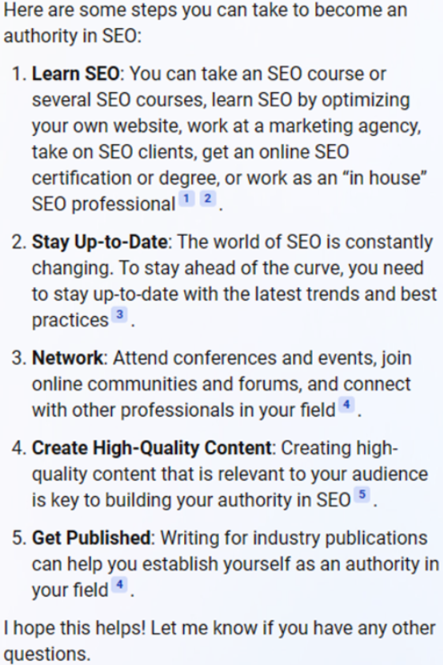

Advanced Prompt: I Want To Become An Authority In SEO. What Steps Should I Take To Reach This Goal?

Up until this point, the prompts have been a bit easy. Let’s find out how each chatbot performs when we use more advanced prompts:

ChatGPT’s Response

Screenshot from ChatGPT, April 2023

Bard’s Response

Screenshot from Google Bard, April 2023

Bing’s Response

Screenshot from Bing Chat, April 2023

ChatGPT provides me with more “light bulb” moments, explaining that I should learn things like keyword research, on-page optimization, and link building.

Knowledge seemed to be the core of the recommendations from ChatGPT, but it would have been nice if it mentioned anything about getting published.

Overall, these tips are very similar, but ChatGPT was my favorite. Let’s try putting these chatbots to work on some tasks that I’m sure they can perform.

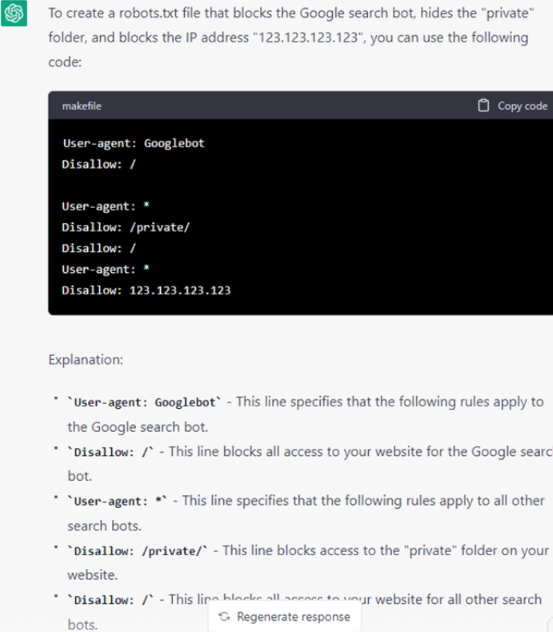

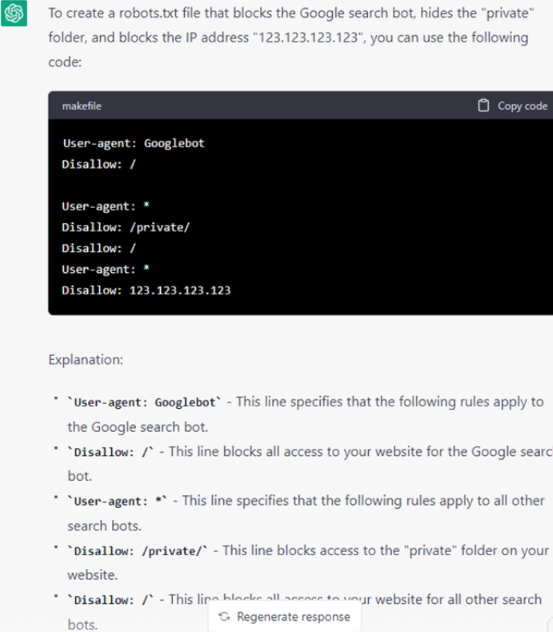

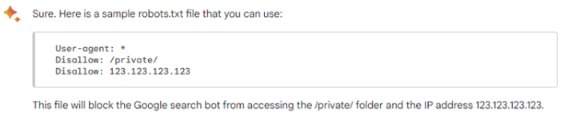

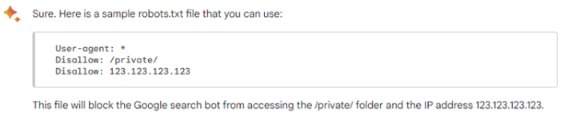

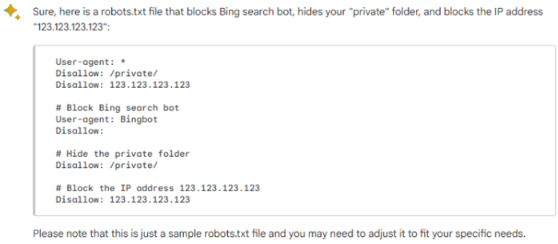

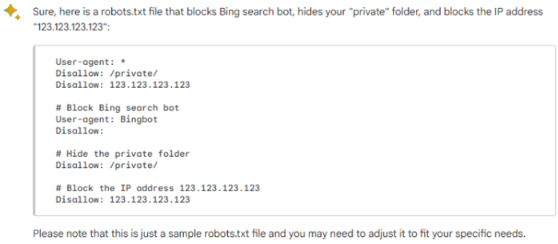

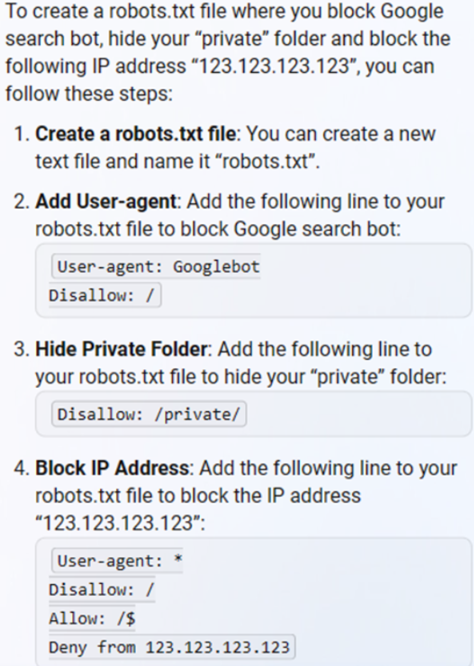

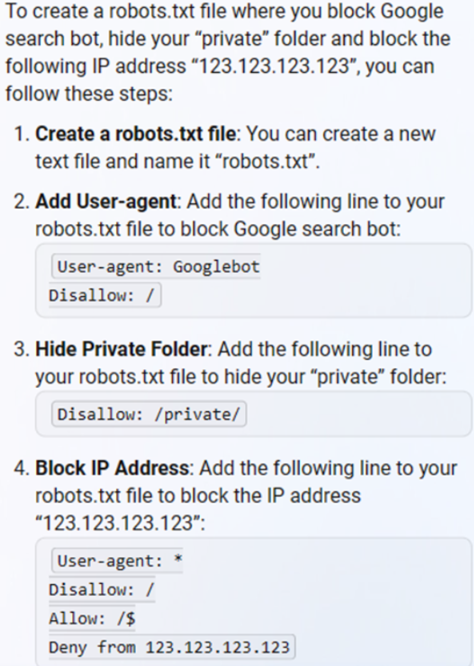

Advanced Prompt: Create A Robots.txt File Where I Block Google Search Bot, Hide My “Private” Folder, And Block The Following IP Address “123.123.123.123”

ChatGPT’s Response

Screenshot from ChatGPT, April 2023

ChatGPT listened to my directions, reiterated them to me, showed me the robots.txt in a code block (labeled as a makefile), and then explained the parameters to use. I’m impressed.

Bard’s Response

Screenshot from Google Bard, April 2023

Google! Are you assuming that you’re the only search bot in the world because you’re blocking everyone? Unfortunately, Bard uses the “*” as an agent, meaning every search engine is blocked from going to my site – not just Google.

Interestingly, when I repeated the question to block Bing on a fresh chat, it provided the same answer. But when I asked the question a second time in a row, it provided a much better answer with some comments:

Screenshot from Google Bard, April 2023

Bing’s Response

Screenshot from Bing Chat, April 2023

Bing tries hard, and I appreciate the explanation that it provides. However, it’s a bit strange. We’re disallowing all bots using “/,” then allowing “/$,” which lets them crawl the homepage and nothing else, and then denying a certain IP address.

ChatGPT wins this test because it provides a clean and easy way to make your robots.txt file. The other two examples need some fine-tuning and will have undesired consequences if you simply copy and paste them into your robots.txt file.
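To see why the choice of user agent matters, here is a small sketch using Python’s standard `urllib.robotparser` module. The two rule sets and the example.com URLs are illustrative assumptions, not the chatbots’ actual output. Note that robots.txt has no directive for blocking an IP address; that is normally handled at the server or firewall level, so it is left out here.

```python
from urllib.robotparser import RobotFileParser


def parse_rules(text: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(text.splitlines())
    return parser


# Assumed rules: block only Googlebot, keep /private/ off-limits to everyone else.
TARGETED = """\
User-agent: Googlebot
Disallow: /

User-agent: *
Disallow: /private/
"""

# Assumed rules: the wildcard agent blocks every crawler from the whole site.
WILDCARD = """\
User-agent: *
Disallow: /
"""

targeted = parse_rules(TARGETED)
wildcard = parse_rules(WILDCARD)

for label, rules in (("targeted", targeted), ("wildcard", wildcard)):
    for agent in ("Googlebot", "Bingbot"):
        allowed = rules.can_fetch(agent, "https://example.com/")
        print(f"{label}: {agent} may crawl the homepage -> {allowed}")
```

With the targeted rules, only Googlebot is shut out of the homepage, while the wildcard rules turn every crawler away. Keep in mind that a Disallow line only asks well-behaved crawlers to stay out; it does not actually hide or protect a folder.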

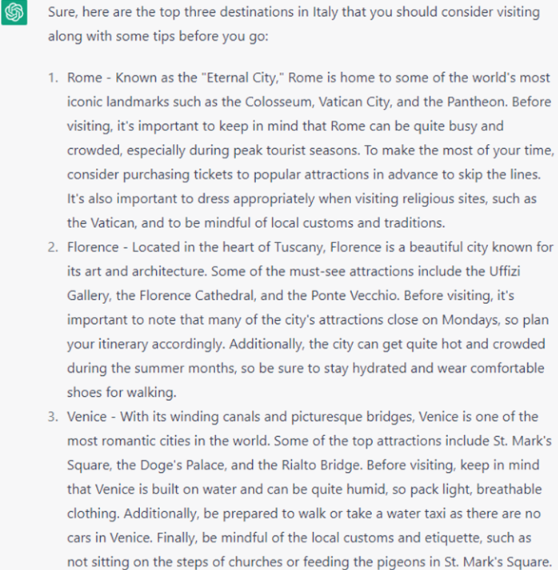

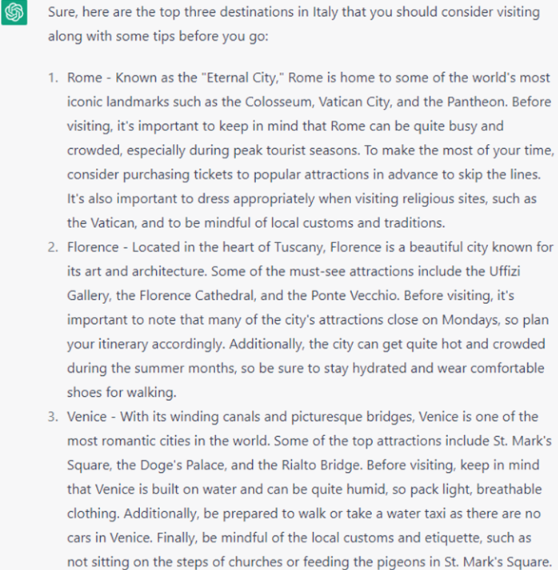

Advanced Prompt: What Are The Top 3 Destinations In Italy To Visit, And What Should I Know Before Visiting Them?

ChatGPT’s Response

Screenshot from ChatGPT, April 2023

ChatGPT does a nice job with its recommended places and provides useful, on-point tips for each. I also like how “St. Mark’s Square” was used, showing that the bot can discern that “Piazza San Marco” is called “St. Mark’s Square” in English.

As a follow-up question, I asked what sunglasses to wear in Italy during my trip, and the response was:

Screenshot from ChatGPT, April 2023

This was a long shot, as the AI doesn’t know my facial shape, likes and dislikes, or interests in fashion. But it did recommend some of the popular eyewear, like the world-famous Ray-Ban Aviators.

Bard’s Response

Screenshot from Google Bard, April 2023

Bard did really well here, and I actually like the recommendations that it provides.

Reading this, I know that Rome is crowded and expensive, and if I want to learn about Italian art, I can go to the Uffizi Gallery when I’m in Florence.

Just out of curiosity, I looked at the second draft from Bard, and it was even better than the first.

Screenshot from Google Bard, April 2023

This is the “things to know” section, which is certainly more insightful than the first response. I learned that the cities are walkable, public transport is available, and pickpocketing is a problem (I was waiting for this to be mentioned).

The third draft was much like the first, but I’m learning something about Bard throughout all of this.

Bard seems to have answers with great insights, but it’s not always the first draft or response that the bot gives. If Google corrects this issue, it might provide even better answers than Bing and ChatGPT.

When I asked about sunglasses to wear, it came up with answers similar to ChatGPT’s, but with even more specific models. Again, Bard doesn’t know much about me personally:

Screenshot from Google Bard, April 2023

Bing’s Response

Screenshot from Bing Chat, April 2023

Bing did very well with its response, but it’s curious that it says, “According to 1,” because it would be much nicer to put the site or publication’s name in the place of the number one. The responses are all accurate, albeit very short.

Bard wins this query because it provides more in-depth, meaningful answers. The bot even recommended some very good places to visit in each area, which Bing failed to do. ChatGPT did do well here, too, but the win goes to Bard.

And for the sunglasses query, you be the judge. Some of the recommendations in the list may be out of range for many travelers:

Screenshot from Bing Chat, April 2023

But I did notice the same Aviator sunglasses in the summary.

Which Chatbot Is Better At This Stage?

Each tool has its own strengths and weaknesses.

It’s clear that Bard’s initial responses are lacking, although it’s quick and provides decent answers. Bard has a nice UI, and I believe it has the answers. But I also think it has some “brain fog,” or should we call it “bit fog?”

Bing’s sources are a nice touch and something I hope all of these chatbots eventually incorporate.

The platform is nice to use, but I’m hearing ads are being integrated into it, which will be interesting. Will ads take priority in chat? For example, if I asked my last question about Italy, would ads:

- Gain priority in what information is displayed?

- Cause misinformation? For example, would the top pizza place be a paid ad from a place with horrible reviews instead of the top-rated pizzeria?

ChatGPT, Bard, and Bing are all interesting tools, but what does the future hold for publishers and users? That’s something I cannot answer. No one can yet.

And There’s Also The Major Question: Is AI “Out Of Control?”

Elon Musk, Steve Wozniak, and over a thousand other leaders in tech, AI, ethics, and more are calling for a six-month pause on AI beyond GPT-4.

The pause is not to hinder progress but to allow time to understand the “profound risks to society and humanity.”

These leaders are asking for time to develop and implement measures to ensure that AI tools are safe and are asking governments to create a moratorium to address the issues.

What are your thoughts on these AI tools? Should we pause anything beyond GPT-4 until new measures are in place?

Featured Image: Legendary4/Shutterstock