Former Google CEO Eric Schmidt said that the trajectory of AI is both “enticing” and “frightening.” He emphasized that AI is not just an evolution of technology; it is about shaping the future of humanity. His comments reflect how technology leaders at the highest levels think about AI, and they carry implications for how AI will play out for SEO.

Tech Companies Shouldn’t Be Making The Decisions

Asked whether decisions about the future of technology should be left to people like him, Eric Schmidt said no. He cited Henry Kissinger, who ten years ago said that people like Schmidt should not be making those decisions, and he used the example of social media to explain why.

“Let’s look at social media. We’ve now arrived at a situation where we have these huge companies in which I was part of. And they all have this huge positive implication for entertainment and culture, but they have significant negative implications in terms of tribalism, misinformation, individual harm, especially against young people, and especially against young women.

None of us foresaw that. Maybe if we’d had some non-technical people doing this with us, we would have foreseen the impact on society. I don’t want us to make that mistake again with a much more powerful tool.”

AI Is Both Frightening & Enticing

Eric Schmidt has been an active participant in the development of computer technology from 1975 to the present. The awe he expresses about this moment is something everyone in search marketing, from publishing and SEO to advertising and ecommerce, should take note of. The precipice we find ourselves at should not be underestimated; at this point, it barely seems possible to overestimate it.

Sundar Pichai, Google’s current CEO, has stated that search will change in profound ways in 2025, and it has been revealed that Google Gemini 2.0 will play a role in powering AI search. Given both, Schmidt’s remarks about the mind-boggling scale of computing capabilities should matter a great deal to search marketers, both for the enticing capabilities they offer and for the frightening realities of what Google will be doing.

Schmidt observed:

“There are two really big things happening right now in our industry. One is the development of what are called agents, where agents can do something. So you can say I want to build a house so you find the architect, go through the land use, buy the houses. Can all be done by computer not just by humans.

And then the other thing is the ability for the computer to write code. So if I say to you I wanted sort of study the audience for this show and I want you to figure out how to make a variant of my show for each and every person who’s watching it. The computer can do that. That’s how powerful the programming capabilities of AI are.

In my case, I’ve managed programmers my whole life and they typically don’t do what I want. You know, they do whatever they want.

With a computer, it’ll do exactly what you say. And the gains in computer programming from the AI systems are frightening, they’re both enticing because they will change the slope.

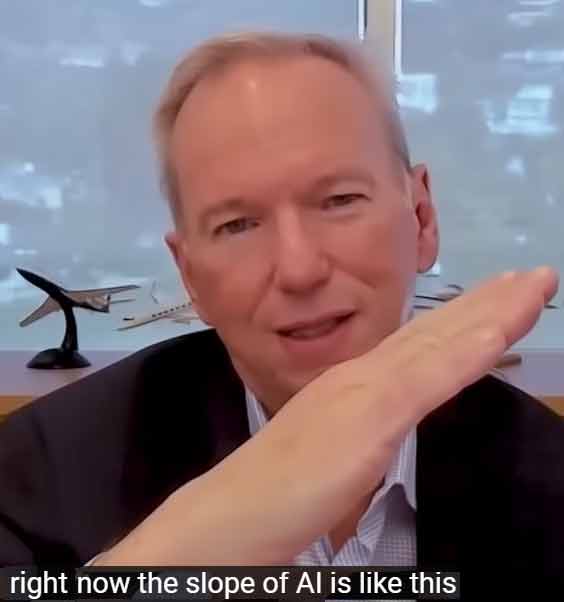

Right now, the slope of AI is like this…”

Screenshot Of Schmidt Illustrating The Slope Of AI

He continued his answer:

“…and when you have AI scientists, that is computers developing AI, the slope will go this… it will go wham! But that development puts an awful lot of power in the hands of an awful lot of people.”

Screenshot Of Eric Schmidt Illustrating The Future AI Slope

Embedding The Intrinsic Goodness Of Humanity In AI

The interview ended with an exchange about whether positive human values and ethical principles can be embedded into AI systems during their development.

Some people complain that the ethical guardrails placed on AI are based on political or ideological values. The complaint reflects a tension between those who want the freedom to use AI for whatever ends they desire and those who fear that AI may be used for evil purposes.

Eric Schmidt addresses this tension by saying that the best of human goodness can be embedded in machines.

The interviewer noted that Schmidt, in his book, expressed confidence that machines will reflect “the intrinsic goodness in humanity” and asked whether humanity can truly be considered inherently good when some people clearly aren’t.

Schmidt acknowledged that a certain percentage of people are evil, but he said that people in general tend to be good and that humans can build ethical rules into AI systems.

He explained:

“The good news is the vast majority of humans on the planet are well meaning, they’re social creatures. They want themselves to do well and they want their neighbors and especially their tribe, to do well.

I see no reason to think that we can’t put those rules into the computers.

One of the tech companies started its training of its model by putting in the Constitution and the Constitution was embedded inside of the model of how you treat things.

Now, of course, we can disagree on what the Constitution is. But these systems are under our control.

There are humans who are making decisions to train them, and furthermore, the systems that you use, whether it’s ChatGPT or Gemini or Claude or what have you, have all been carefully examined after they were produced to make sure they don’t have any really horrific rough edges.

So humans are directly involved in the creation of these models, and they have a responsibility to make sure that nothing horrendous occurs as a result of them.”

That statement seems to assume what he said at the beginning of the interview: that people like him shouldn’t make these decisions alone, but in consultation with outsiders. In practice, however, the decisions are still made by corporations.


People Mean Well But Corporations Answer To Profits

The question that wasn’t asked is this: with a few exceptions (like the outdoor clothing company Patagonia), corporations generally aren’t motivated by “human goodness” and don’t base their decisions on ethics, so can they be trusted to imbue machines with human goodness?

Despite clickbait articles to the contrary, Google still publishes its “don’t be evil” motto on its Code of Conduct page; it simply moved the motto to the bottom of the page. Nevertheless, Google’s corporate decisions, including those about search, are strongly driven by profit.

On the issue of whether AI Search is strip-mining websites out of existence, Sundar Pichai, the current Google CEO, struggled to say what Google does to preserve the web ecosystem. That is the outcome of a system that prioritizes profits.

Is that evil, or is it just the banality of a corporate system that prioritizes profit over everything else, leading to harmful outcomes? What does that say about the future of AI Search and the web ecosystem?

Screenshot of Google’s De-Prioritized Don’t Be Evil Motto

Watch The Interview With Eric Schmidt:

[embedded content]

Featured Image by Shutterstock/Frederic Legrand – COMEO