A vector database is a collection of data where each piece of data is stored as a (numerical) vector. A vector represents an object or entity, such as an image, person, place etc. in the abstract N-dimensional space.

Vectors, as explained in the previous chapter, are crucial for identifying how entities are related and can be used to find their semantic similarity. This can be applied in several ways for SEO – such as grouping similar keywords or content (using kNN).

In this article, we are going to learn a few ways to apply AI to SEO, including finding semantically similar content for internal linking. This can help you refine your content strategy in an era where search engines increasingly rely on LLMs.

You can also read a previous article in this series about how to find keyword cannibalization using OpenAI’s text embeddings.

Let’s dive in here to start building the basis of our tool.

Understanding Vector Databases

If you have thousands of articles and want to find the closest semantic similarity for your target query, you can’t create vector embeddings for all of them on the fly to compare, as it is highly inefficient.

For that to happen, we would need to generate vector embeddings only once and keep them in a database we can query and find the closest match article.

And that is what vector databases do: They are special types of databases that store embeddings (vectors).

When you query the database, unlike traditional databases, they perform cosine similarity match and return vectors (in this case articles) closest to another vector (in this case a keyword phrase) being queried.

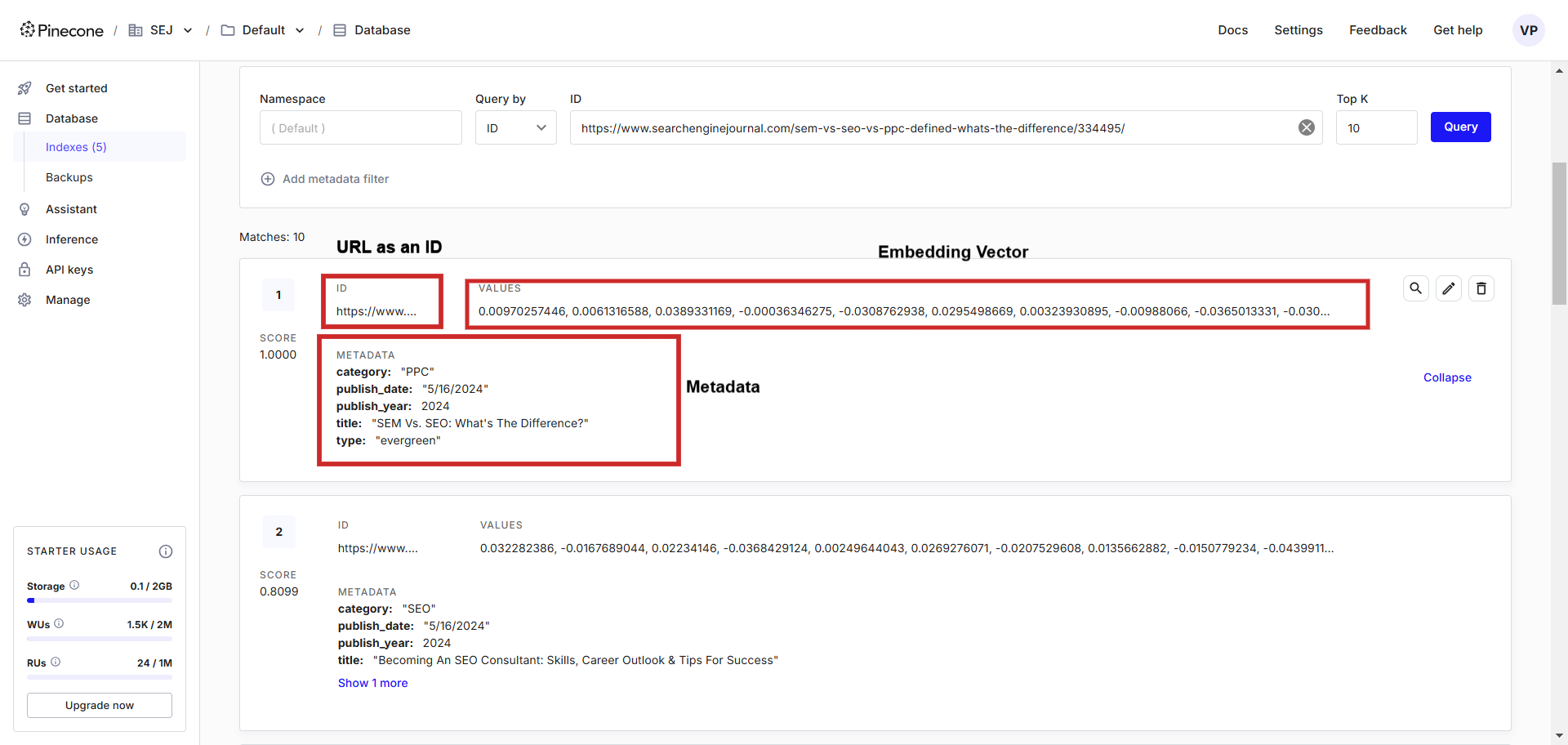

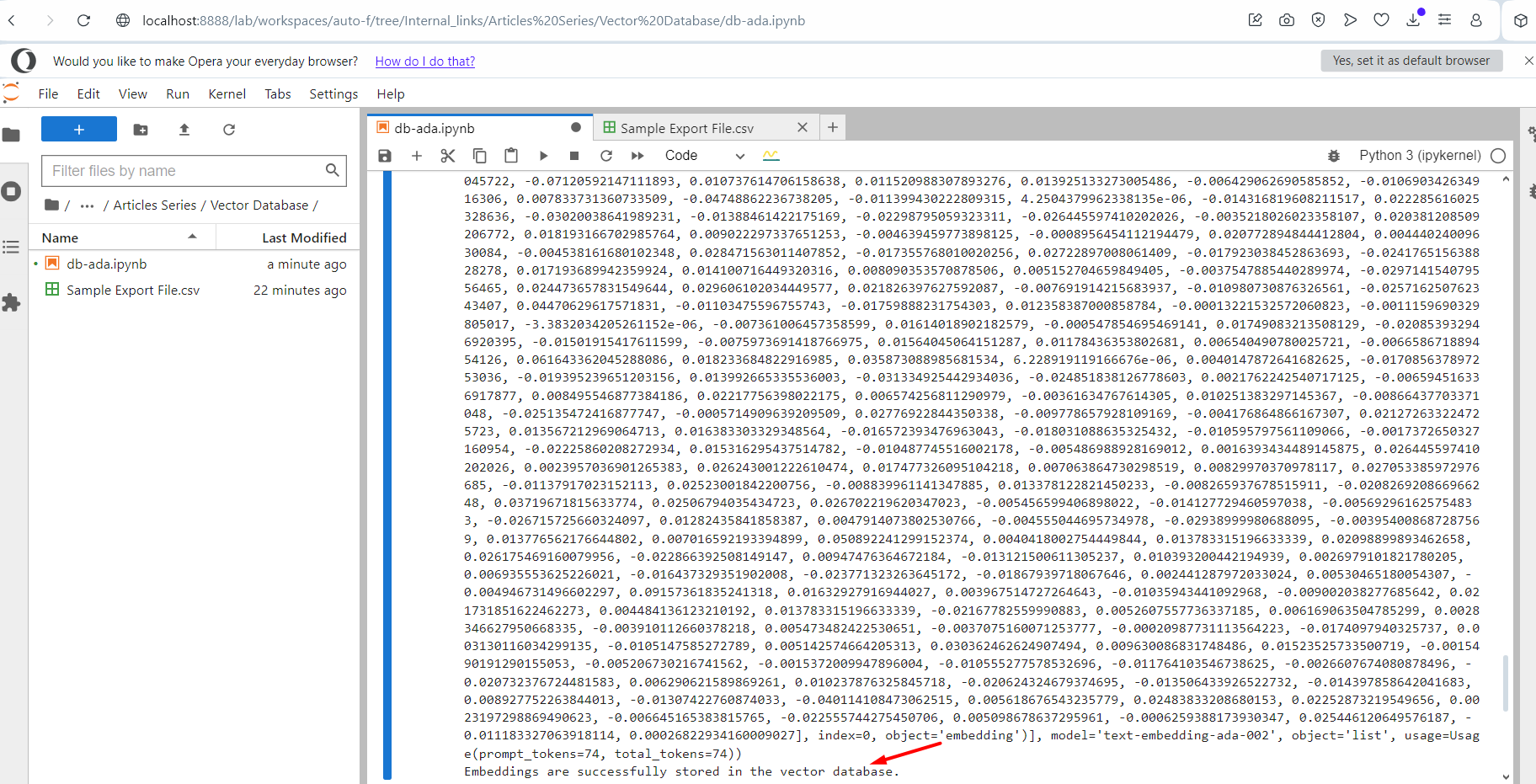

Here is what it looks like:

Text embedding record example in the vector database.

Text embedding record example in the vector database.In the vector database, you can see vectors alongside metadata stored, which we can easily query using a programming language of our choice.

In this article, we will be using Pinecone due to its ease of understanding and simplicity of use, but there are other providers such as Chroma, BigQuery, or Qdrant you may want to check out.

Let’s dive in.

1. Create A Vector Database

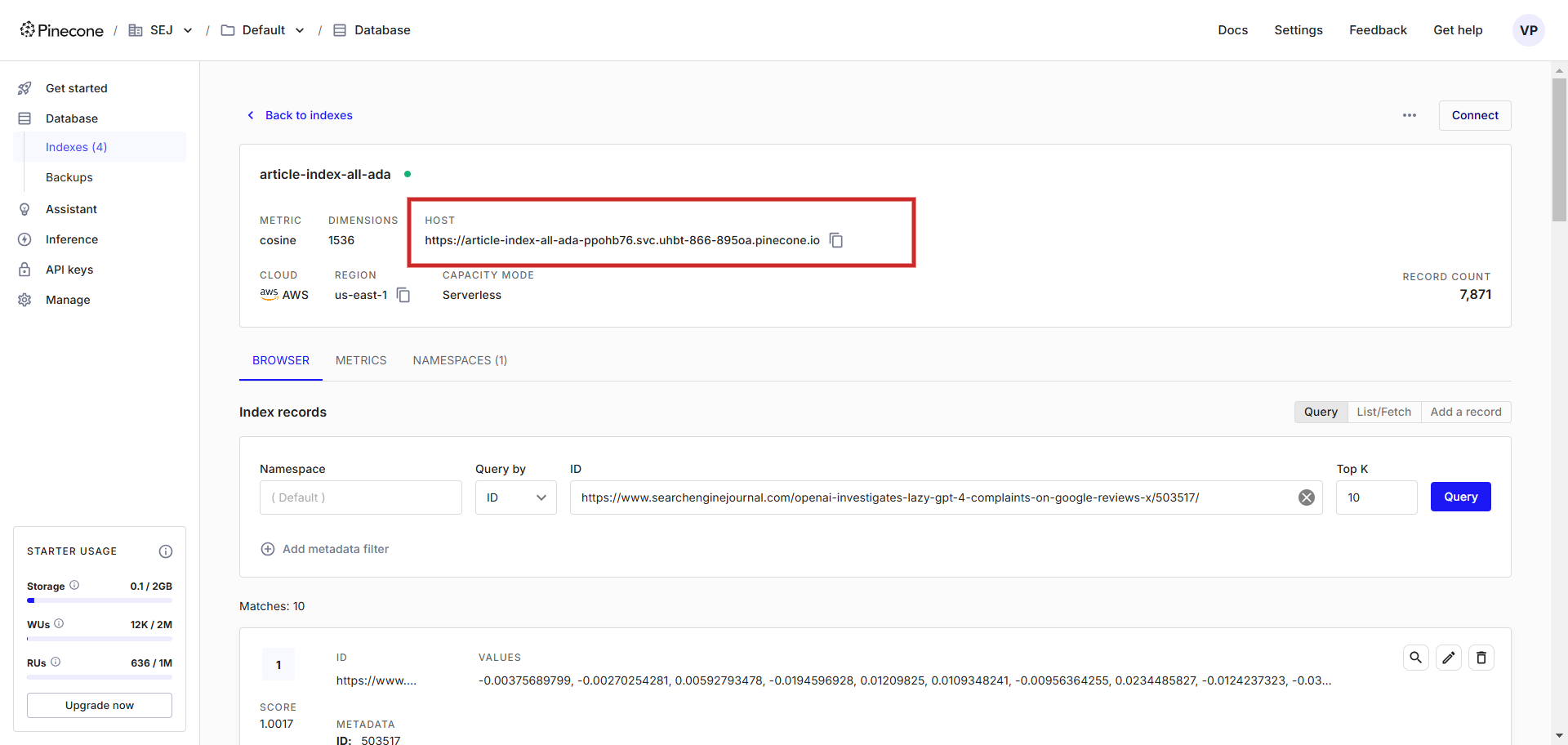

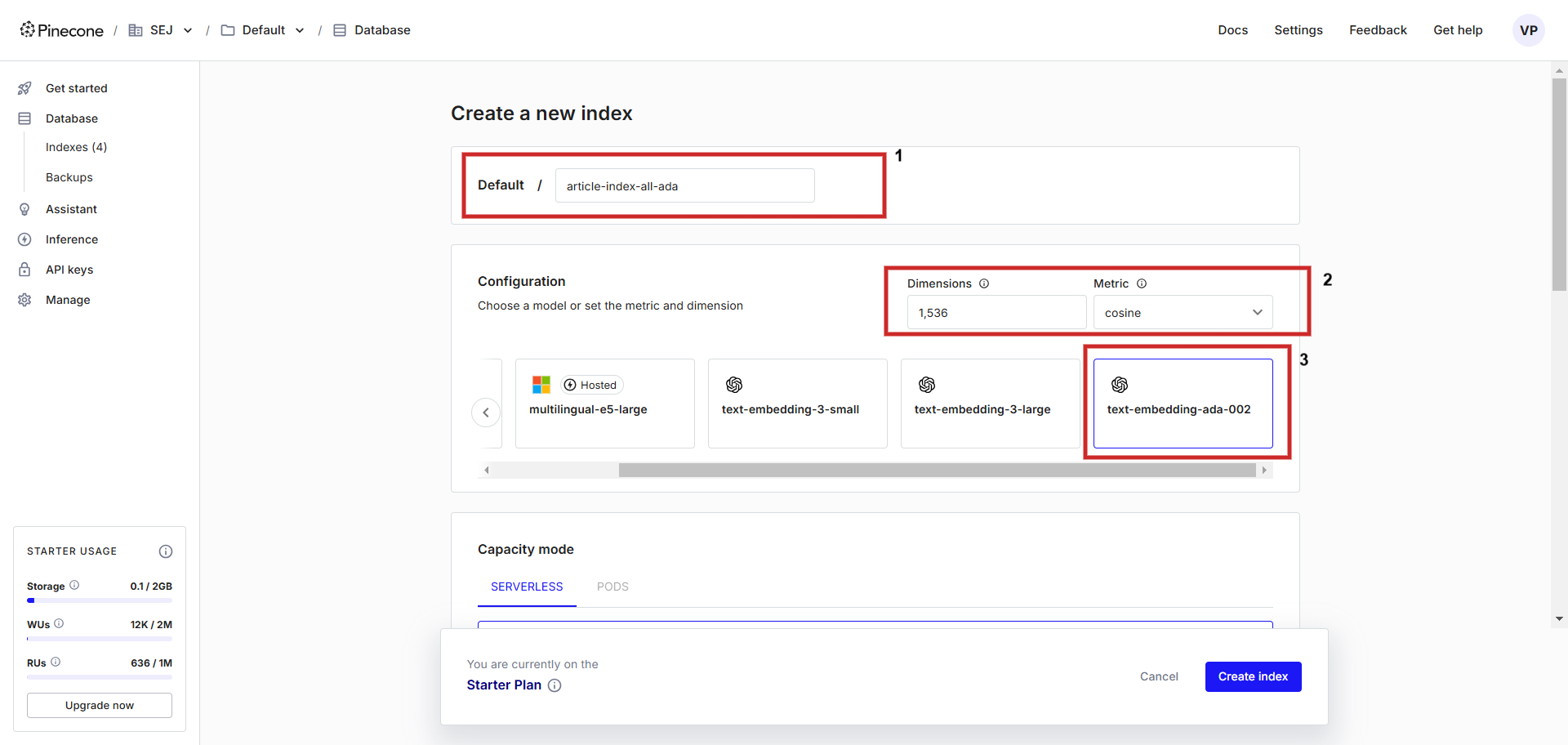

First, register an account at Pinecone and create an index with a configuration of “text-embedding-ada-002” with ‘cosine’ as a metric to measure vector distance. You can name the index anything, we will name itarticle-index-all-ada‘.

Creating a vector database.

Creating a vector database.This helper UI is only for assisting you during the setup, in case you want to store Vertex AI vector embedding you need to set ‘dimensions’ to 768 in the config screen manually to match default dimensionality and you can store Vertex AI text vectors (you can set dimension value anything from 1 to 768 to save memory).

In this article we will learn how to use OpenAi’s ‘text-embedding-ada-002’ and Google’s Vertex AI ‘text-embedding-005’ models.

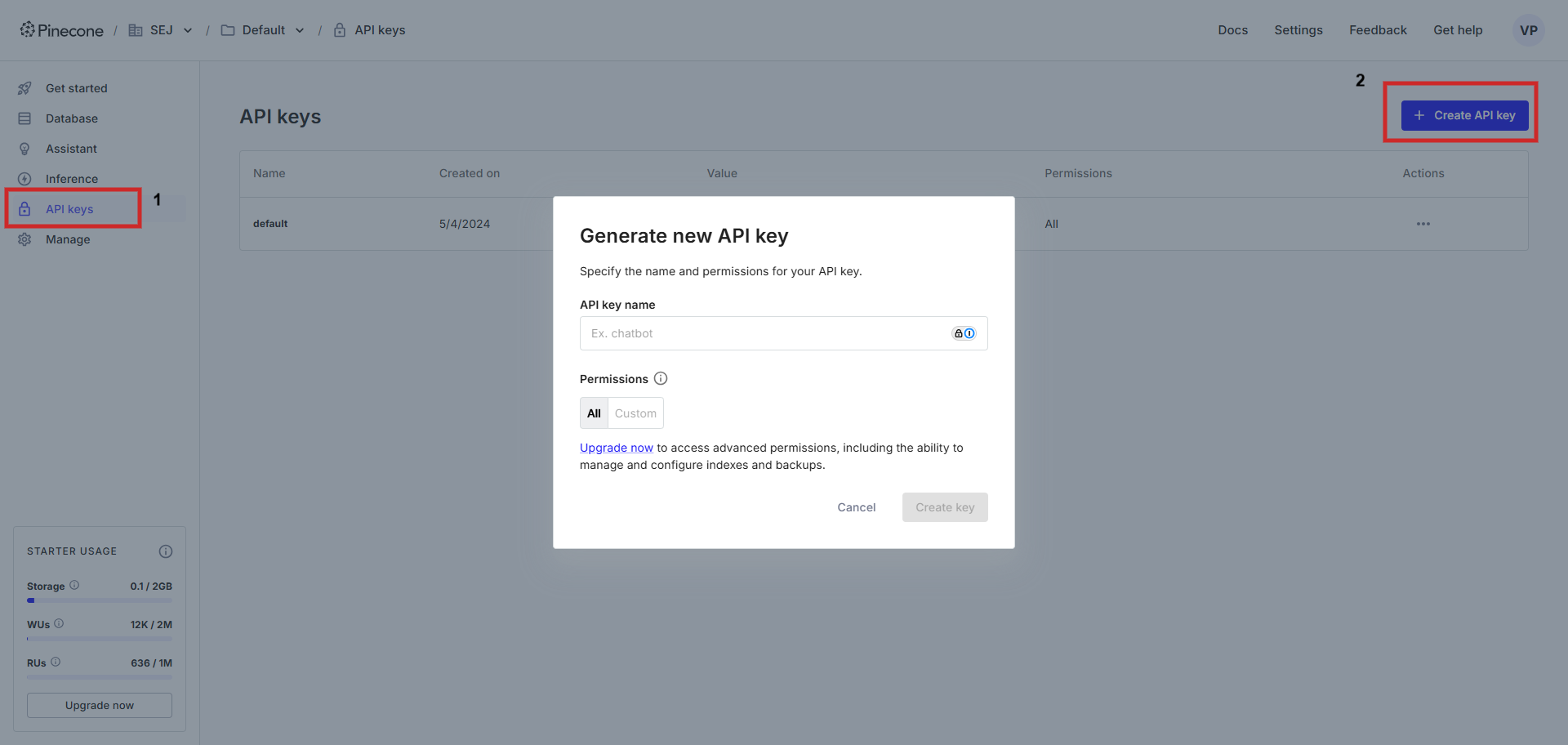

Once created, we need an API key to be able to connect to the database using a host URL of the vector database.

Next, you will need to use Jupyter Notebook. If you don’t have it installed, follow this guide to install it and run this command (below) afterward in your PC’s terminal to install all necessary packages.

pip install openai google-cloud-aiplatform google-auth pandas pinecone-client tabulate ipython numpyAnd remember ChatGPT is very useful when you encounter issues during coding!

2. Export Your Articles From Your CMS

Next, we need to prepare a CSV export file of articles from your CMS. If you use WordPress, you can use a plugin to do customized exports.

As our ultimate goal is to build an internal linking tool, we need to decide which data should be pushed to the vector database as metadata. Essentially, metadata-based filtering acts as an additional layer of retrieval guidance, aligning it with the general RAG framework by incorporating external knowledge, which will help to improve retrieval quality.

For instance, if we are editing an article on “PPC” and want to insert a link to the phrase “Keyword Research,” we can specify in our tool that “Category=PPC.” This will allow the tool to query only articles within the “PPC” category, ensuring accurate and contextually relevant linking, or we may want to link to the phrase “most recent google update” and limit the match only to news articles by using ‘Type’ and published this year.

In our case, we will be exporting:

- Title.

- Category.

- Type.

- Publish Date.

- Publish Year.

- Permalink.

- Meta Description.

- Content.

To help return the best results, we would concatenate the title and meta descriptions fields as they are the best representation of the article that we can vectorize and ideal for embedding and internal linking purposes.

Using the full article content for embeddings may reduce precision and dilute the relevance of the vectors.

This happens because a single large embedding tries to represent multiple topics covered in the article at once, leading to a less focused and relevant representation. Chunking strategies (splitting the article by natural headings or semantically meaningful segments) need to be applied, but these are not the focus of this article.

Here’s the sample export file you can download and use for our code sample below.

2. Inserting OpenAi’s Text Embeddings Into The Vector Database

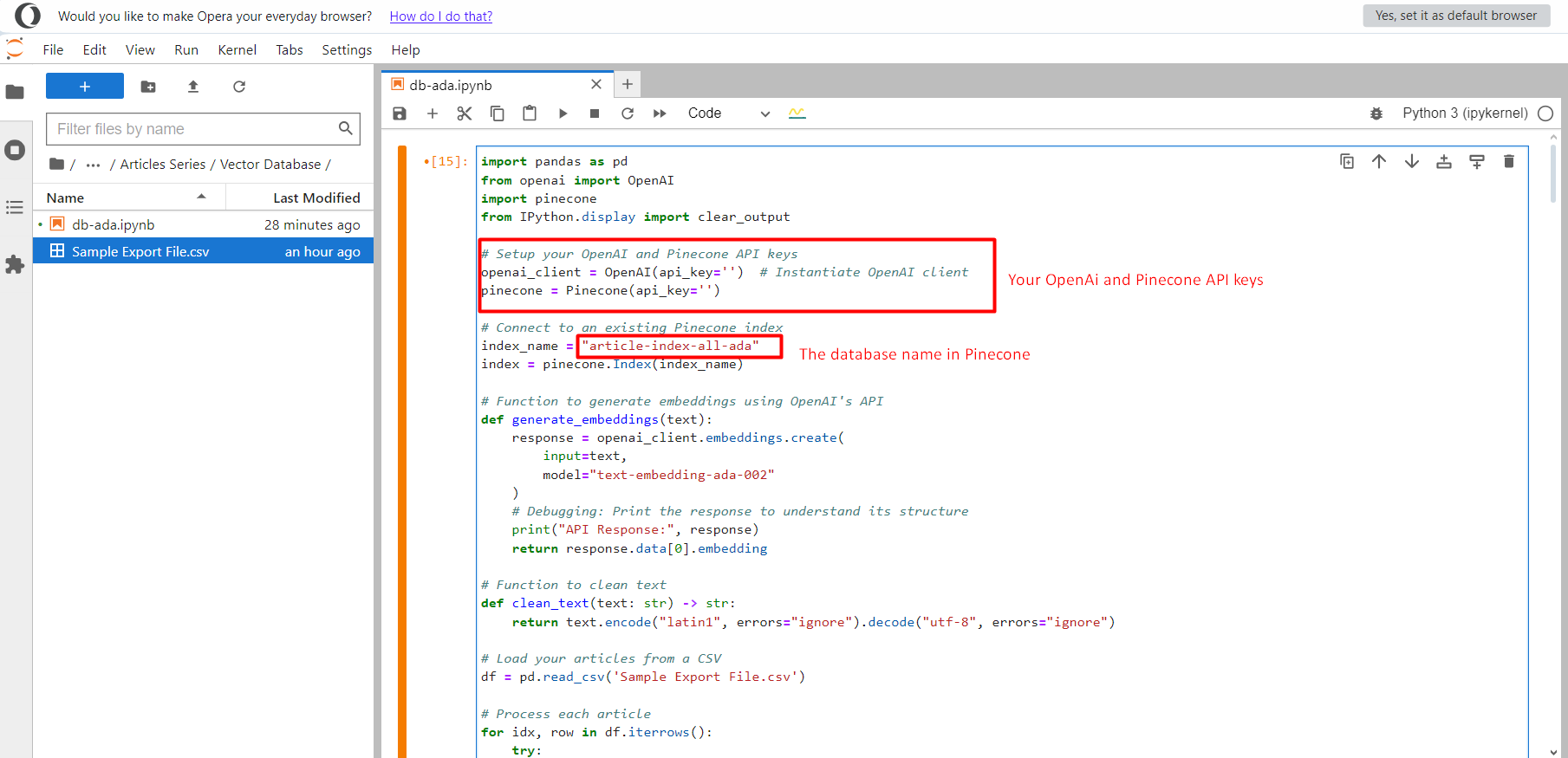

Assuming you already have an OpenAI API key, this code will generate vector embeddings from the text and insert them into the vector database in Pinecone.

import pandas as pd

from openai import OpenAI

from pinecone import Pinecone

from IPython.display import clear_output # Setup your OpenAI and Pinecone API keys

openai_client = OpenAI(api_key='YOUR_OPENAI_API_KEY') # Instantiate OpenAI client

pinecone = Pinecone(api_key='YOUR_PINECON_API_KEY') # Connect to an existing Pinecone index

index_name = "article-index-all-ada"

index = pinecone.Index(index_name) def generate_embeddings(text): """ Generates an embedding for the given text using OpenAI's API. Returns None if text is invalid or an error occurs. """ try: if not text or not isinstance(text, str): raise ValueError("Input text must be a non-empty string.") result = openai_client.embeddings.create( input=text, model="text-embedding-ada-002" ) clear_output(wait=True) # Clear output for a fresh display if hasattr(result, 'data') and len(result.data) > 0: print("API Response:", result) return result.data[0].embedding else: raise ValueError("Invalid response from the OpenAI API. No data returned.") except ValueError as ve: print(f"ValueError: {ve}") return None except Exception as e: print(f"An error occurred while generating embeddings: {e}") return None # Load your articles from a CSV

df = pd.read_csv('Sample Export File.csv') # Process each article

for idx, row in df.iterrows(): try: clear_output(wait=True) content = row["Content"] vector = generate_embeddings(content) if vector is None: print(f"Skipping article ID {row['ID']} due to empty or invalid embedding.") continue index.upsert(vectors=[ ( row['Permalink'], # Unique ID vector, # The embedding { 'title': row['Title'], 'category': row['Category'], 'type': row['Type'], 'publish_date': row['Publish Date'], 'publish_year': row['Publish Year'] } ) ]) except Exception as e: clear_output(wait=True) print(f"Error processing article ID {row['ID']}: {str(e)}") print("Embeddings are successfully stored in the vector database.")

You need to create a notebook file and copy and paste it in there, then upload the CSV file ‘Sample Export File.csv’ in the same folder.

Jupyter project.

Jupyter project.Once done, click on the Run button and it will start pushing all text embedding vectors into the index article-index-all-ada we created in the first step.

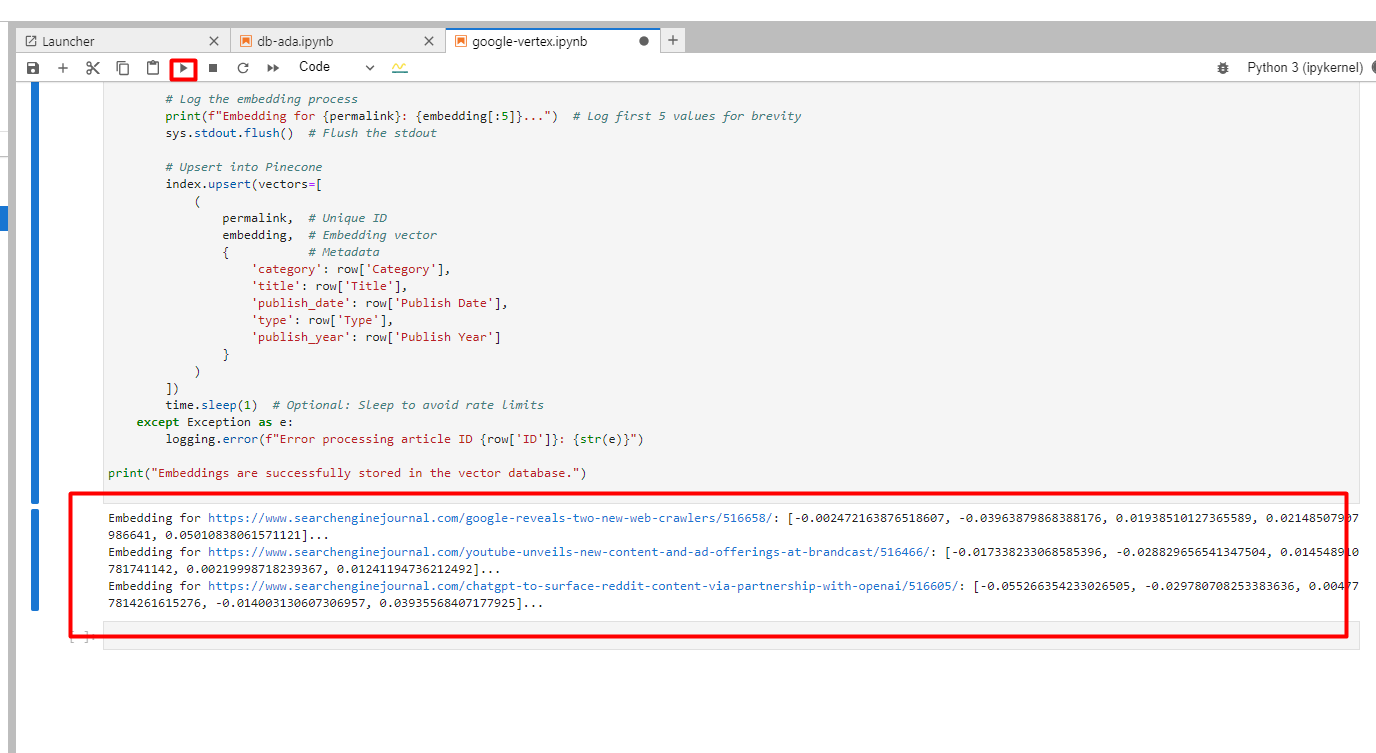

Running the script.

Running the script.You will see an output log text of embedding vectors. Once finished, it will show the message at the end that it was successfully finished. Now go and check your index in the Pinecone and you will see your records are there.

3. Finding An Article Match For A Keyword

Okay now, let’s try to find an article match for the Keyword.

Create a new notebook file and copy and paste this code.

from openai import OpenAI

from pinecone import Pinecone

from IPython.display import clear_output

from tabulate import tabulate # Import tabulate for table formatting # Setup your OpenAI and Pinecone API keys

openai_client = OpenAI(api_key='YOUR_OPENAI_API_KEY') # Instantiate OpenAI client

pinecone = Pinecone(api_key='YOUR_OPENAI_API_KEY') # Connect to an existing Pinecone index

index_name = "article-index-all-ada"

index = pinecone.Index(index_name) # Function to generate embeddings using OpenAI's API

def generate_embeddings(text): """ Generates an embedding for a given text using OpenAI's API. """ try: if not text or not isinstance(text, str): raise ValueError("Input text must be a non-empty string.") result = openai_client.embeddings.create( input=text, model="text-embedding-ada-002" ) # Debugging: Print the response to understand its structure clear_output(wait=True) #print("API Response:", result) if hasattr(result, 'data') and len(result.data) > 0: return result.data[0].embedding else: raise ValueError("Invalid response from the OpenAI API. No data returned.") except ValueError as ve: print(f"ValueError: {ve}") return None except Exception as e: print(f"An error occurred while generating embeddings: {e}") return None # Function to query the Pinecone index with keywords and metadata

def match_keywords_to_index(keywords): """ Matches a list of keywords to the closest article in the Pinecone index, filtering by metadata dynamically. """ results = [] for keyword_pair in keywords: try: clear_output(wait=True) # Extract the keyword and category from the sub-array keyword = keyword_pair[0] category = keyword_pair[1] # Generate embedding for the current keyword vector = generate_embeddings(keyword) if vector is None: print(f"Skipping keyword '{keyword}' due to embedding error.") continue # Query the Pinecone index for the closest vector with metadata filter query_results = index.query( vector=vector, # The embedding of the keyword top_k=1, # Retrieve only the closest match include_metadata=True, # Include metadata in the results filter={"category": category} # Filter results by metadata category dynamically ) # Store the closest match if query_results['matches']: closest_match = query_results['matches'][0] results.append({ 'Keyword': keyword, # The searched keyword 'Category': category, # The category used for filtering 'Match Score': f"{closest_match['score']:.2f}", # Similarity score (formatted to 2 decimal places) 'Title': closest_match['metadata'].get('title', 'N/A'), # Title of the article 'URL': closest_match['id'] # Using 'id' as the URL }) else: results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': 'N/A', 'Title': 'No match found', 'URL': 'N/A' }) except Exception as e: clear_output(wait=True) print(f"Error processing keyword '{keyword}' with category '{category}': {e}") results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': 'Error', 'Title': 'Error occurred', 'URL': 'N/A' }) return results # Example usage: Find matches for an array of keywords and categories

keywords = [["SEO Tools", "SEO"], ["TikTok", "TikTok"], ["SEO Consultant", "SEO"]] # Replace with your keywords and categories

matches = match_keywords_to_index(keywords) # Display the results in a table

print(tabulate(matches, headers="keys", tablefmt="fancy_grid"))

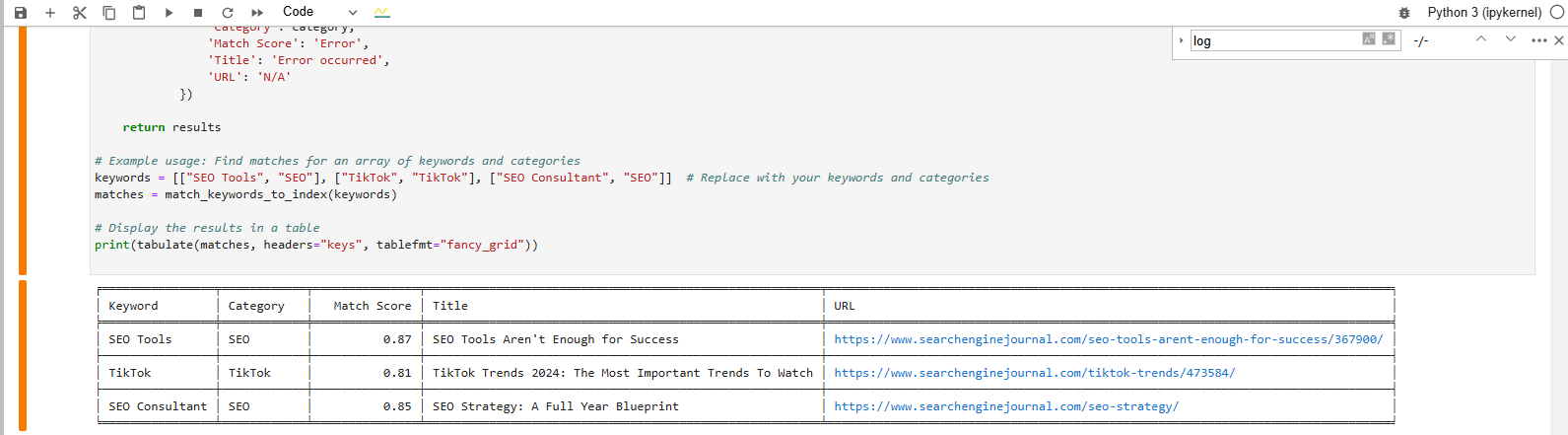

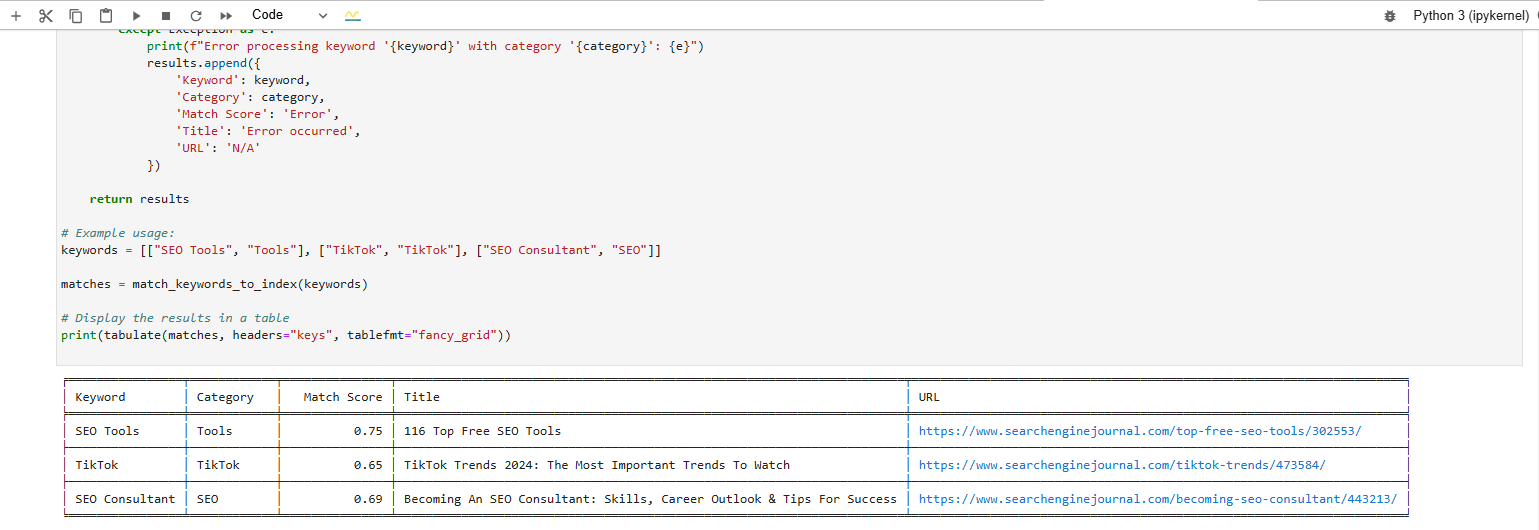

We’re trying to find a match for these keywords:

- SEO Tools.

- TikTok.

- SEO Consultant.

And this is the result we get after executing the code:

Find a match for the keyword phrase from vector database

Find a match for the keyword phrase from vector databaseThe table formatted output at the bottom shows the closest article matches to our keywords.

4. Inserting Google Vertex AI Text Embeddings Into The Vector Database

Now let’s do the same but with Google Vertex AI ‘text-embedding-005’embedding. This model is notable because it’s developed by Google, powers Vertex AI Search, and is specifically trained to handle retrieval and query-matching tasks, making it well-suited for our use case.

You can even build an internal search widget and add it to your website.

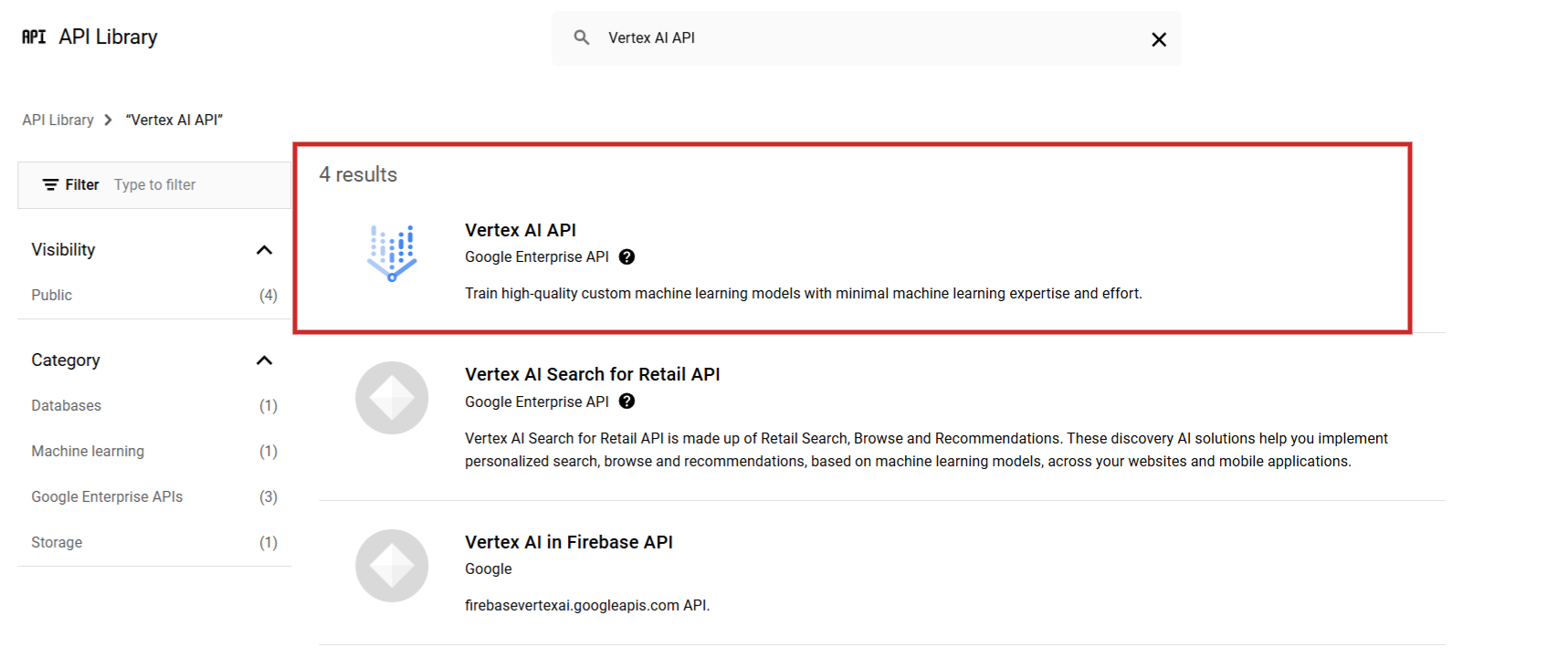

Start by signing in to Google Cloud Console and create a project. Then from the API library find Vertex AI API and enable it.

Screenshot from Google Cloud Console, December 2024

Screenshot from Google Cloud Console, December 2024Set up your billing account to be able to use Vertex AI as pricing is $0.0002 per 1,000 characters (and it offers $300 credits for new users).

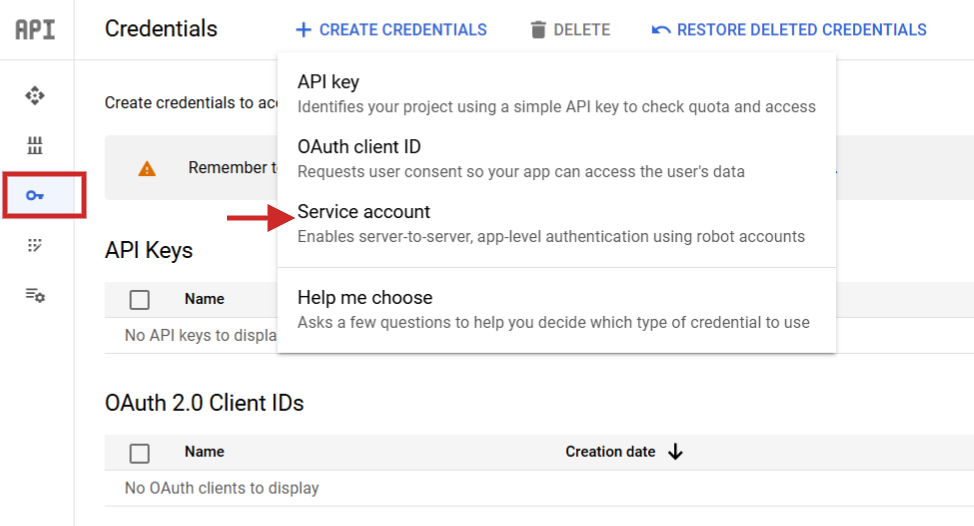

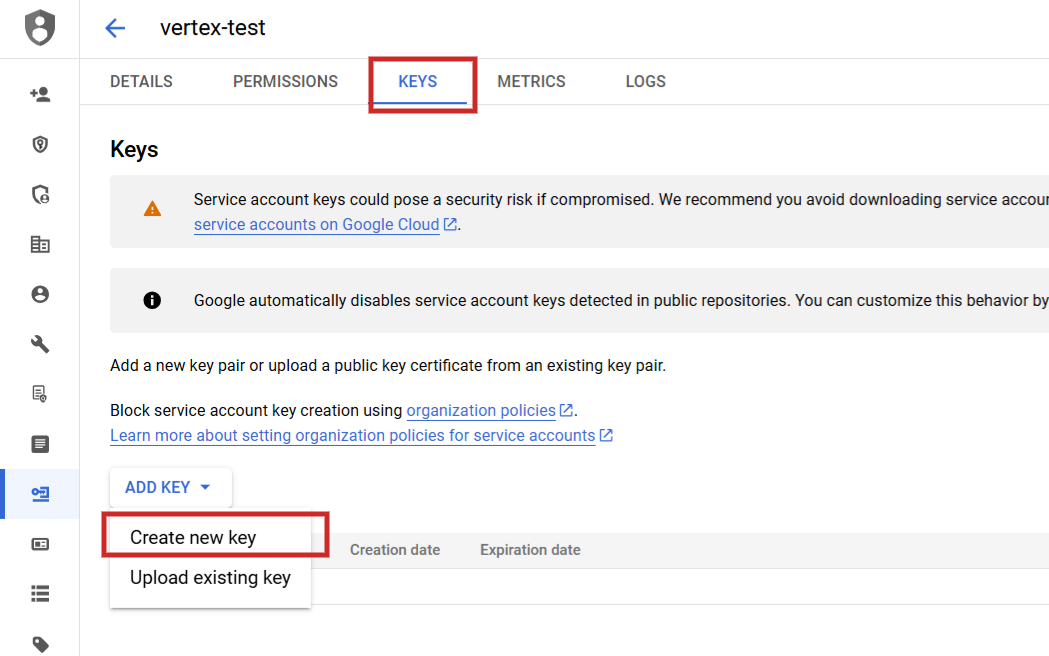

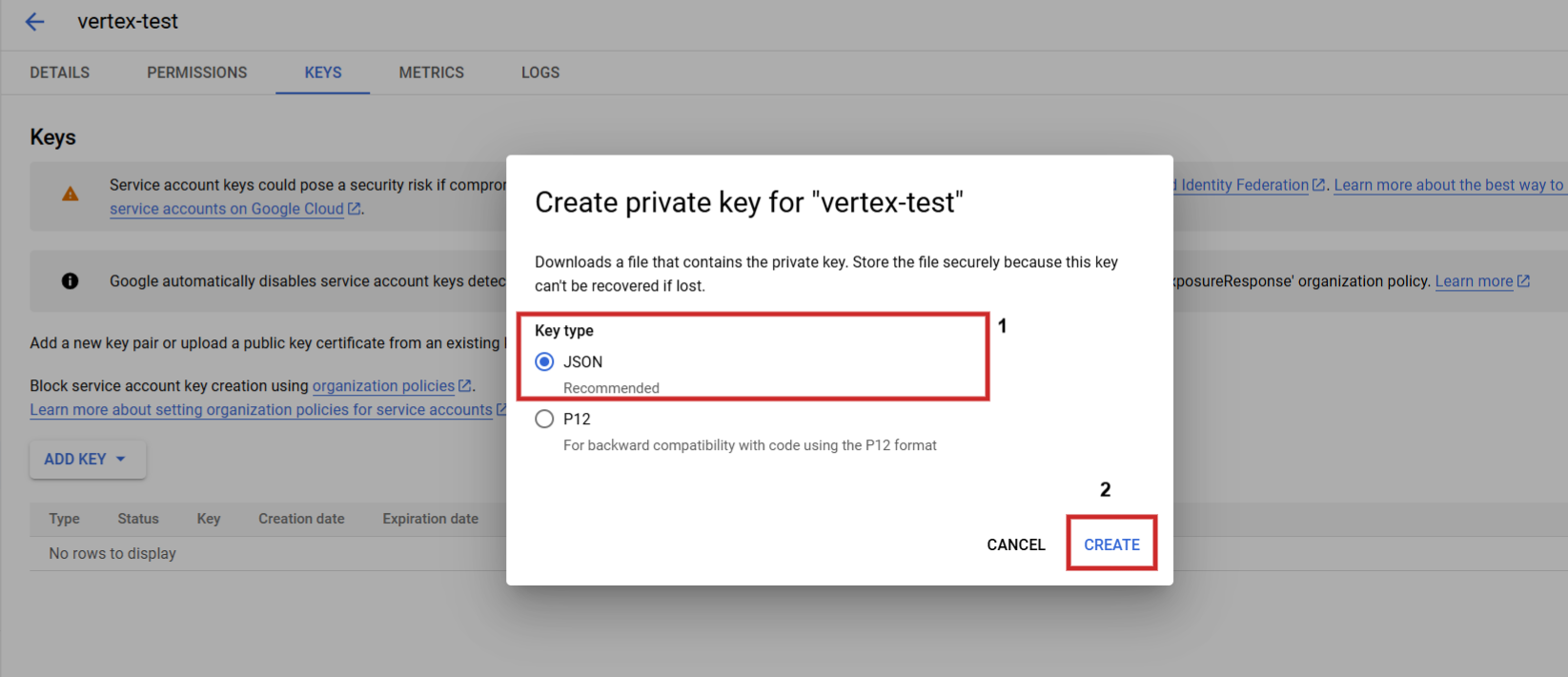

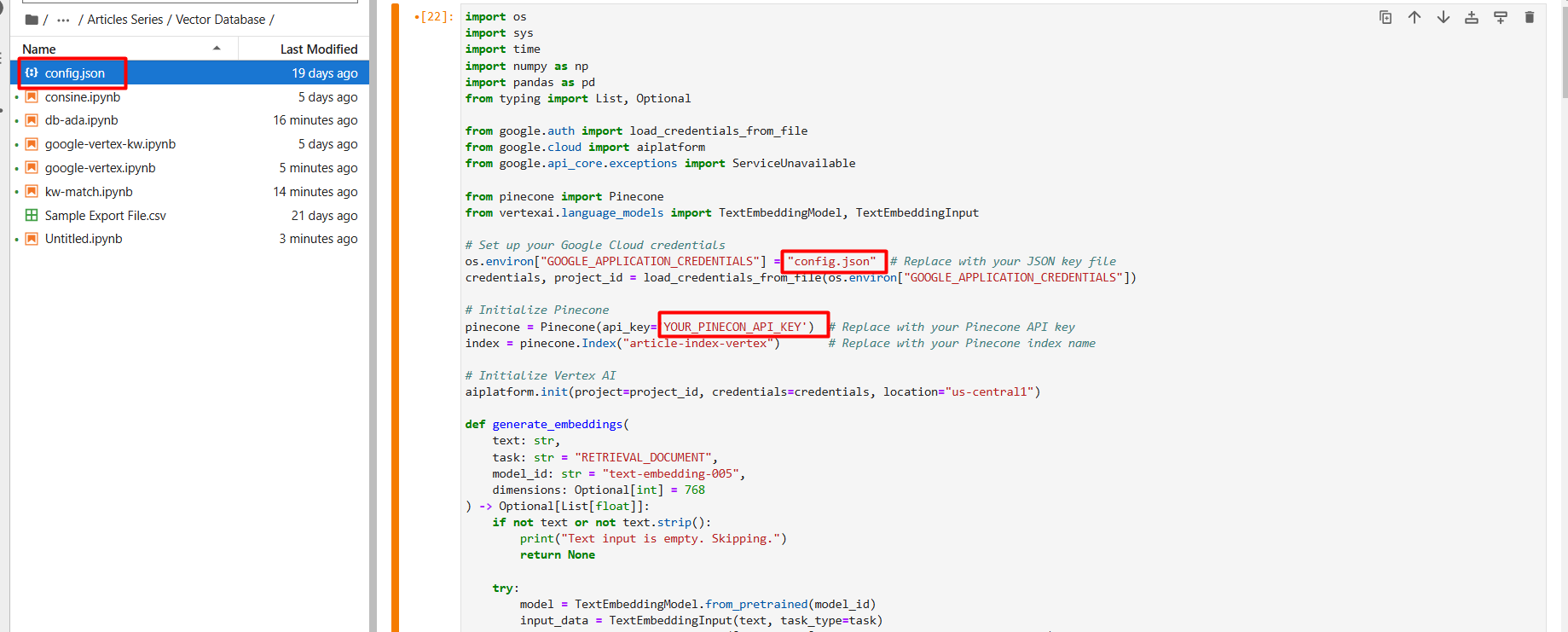

Once you set it, you need to navigate to API Services > Credentials create a service account, generate a key, and download them as JSON.

Rename the JSON file to config.json and upload it (via the arrow up icon) to your Jupyter Notebook project folder.

Screenshot from Google Cloud Console, December 2024

Screenshot from Google Cloud Console, December 2024In the setup first step, create a new vector database called article-index-vertex by setting dimension 768 manually.

Once created you can run this script to start generating vector embeddings from the the same sample file using Google Vertex AI text-embedding-005 model (you can choose text-multilingual-embedding-002 if you have non-English text).

import os

import sys

import time

import numpy as np

import pandas as pd

from typing import List, Optional from google.auth import load_credentials_from_file

from google.cloud import aiplatform

from google.api_core.exceptions import ServiceUnavailable from pinecone import Pinecone

from vertexai.language_models import TextEmbeddingModel, TextEmbeddingInput # Set up your Google Cloud credentials

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "config.json" # Replace with your JSON key file

credentials, project_id = load_credentials_from_file(os.environ["GOOGLE_APPLICATION_CREDENTIALS"]) # Initialize Pinecone

pinecone = Pinecone(api_key='YOUR_PINECON_API_KEY') # Replace with your Pinecone API key

index = pinecone.Index("article-index-vertex") # Replace with your Pinecone index name # Initialize Vertex AI

aiplatform.init(project=project_id, credentials=credentials, location="us-central1") def generate_embeddings( text: str, task: str = "RETRIEVAL_DOCUMENT", model_id: str = "text-embedding-005", dimensions: Optional[int] = 768

) -> Optional[List[float]]: if not text or not text.strip(): print("Text input is empty. Skipping.") return None try: model = TextEmbeddingModel.from_pretrained(model_id) input_data = TextEmbeddingInput(text, task_type=task) vectors = model.get_embeddings([input_data], output_dimensionality=dimensions) return vectors[0].values except ServiceUnavailable as e: print(f"Vertex AI service is unavailable: {e}") return None except Exception as e: print(f"Error generating embeddings: {e}") return None # Load data from CSV

data = pd.read_csv("Sample Export File.csv") # Replace with your CSV file path for idx, row in data.iterrows(): try: permalink = str(row["Permalink"]) content = row["Content"] embedding = generate_embeddings(content) if not embedding: print(f"Skipping article ID {row['ID']} due to empty or failed embedding.") continue print(f"Embedding for {permalink}: {embedding[:5]}...") sys.stdout.flush() index.upsert(vectors=[ ( permalink, embedding, { 'category': row['Category'], 'title': row['Title'], 'publish_date': row['Publish Date'], 'type': row['Type'], 'publish_year': row['Publish Year'] } ) ]) time.sleep(1) # Optional: Sleep to avoid rate limits except Exception as e: print(f"Error processing article ID {row['ID']}: {e}") print("All embeddings are stored in the vector database.") You will see below in logs of created embeddings.

Screenshot from Google Cloud Console, December 2024

Screenshot from Google Cloud Console, December 20244. Finding An Article Match For A Keyword Using Google Vertex AI

Now, let’s do the same keyword matching with Vertex AI. There is a small nuance as you need to use ‘RETRIEVAL_QUERY’ vs. ‘RETRIEVAL_DOCUMENT’ as an argument when generating embeddings of keywords as we are trying to perform a search for an article (aka document) that best matches our phrase.

Task types are one of the important advantages that Vertex AI has over OpenAI’s models.

It ensures that the embeddings capture the intent of the keywords which is important for internal linking, and improves the relevance and accuracy of the matches found in your vector database.

Use this script for matching the keywords to vectors.

import os

import pandas as pd

from google.cloud import aiplatform

from google.auth import load_credentials_from_file

from google.api_core.exceptions import ServiceUnavailable

from vertexai.language_models import TextEmbeddingModel from pinecone import Pinecone

from tabulate import tabulate # For table formatting # Set up your Google Cloud credentials

os.environ["GOOGLE_APPLICATION_CREDENTIALS"] = "config.json" # Replace with your JSON key file

credentials, project_id = load_credentials_from_file(os.environ["GOOGLE_APPLICATION_CREDENTIALS"]) # Initialize Pinecone client

pinecone = Pinecone(api_key='YOUR_PINECON_API_KEY') # Add your Pinecone API key

index_name = "article-index-vertex" # Replace with your Pinecone index name

index = pinecone.Index(index_name) # Initialize Vertex AI

aiplatform.init(project=project_id, credentials=credentials, location="us-central1") def generate_embeddings( text: str, model_id: str = "text-embedding-005"

) -> list: """ Generates embeddings for the input text using Google Vertex AI's embedding model. Returns None if text is empty or an error occurs. """ if not text or not text.strip(): print("Text input is empty. Skipping.") return None try: model = TextEmbeddingModel.from_pretrained(model_id) vector = model.get_embeddings([text]) # Removed 'task_type' and 'output_dimensionality' return vector[0].values except ServiceUnavailable as e: print(f"Vertex AI service is unavailable: {e}") return None except Exception as e: print(f"Error generating embeddings: {e}") return None def match_keywords_to_index(keywords): """ Matches a list of keyword-category pairs to the closest articles in the Pinecone index, filtering by metadata if specified. """ results = [] for keyword_pair in keywords: keyword = keyword_pair[0] category = keyword_pair[1] try: keyword_vector = generate_embeddings(keyword) if not keyword_vector: print(f"No embedding generated for keyword '{keyword}' in category '{category}'.") results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': 'Error/Empty', 'Title': 'No match', 'URL': 'N/A' }) continue query_results = index.query( vector=keyword_vector, top_k=1, include_metadata=True, filter={"category": category} ) if query_results['matches']: closest_match = query_results['matches'][0] results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': f"{closest_match['score']:.2f}", 'Title': closest_match['metadata'].get('title', 'N/A'), 'URL': closest_match['id'] }) else: results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': 'N/A', 'Title': 'No match found', 'URL': 'N/A' }) except Exception as e: print(f"Error processing keyword '{keyword}' with category '{category}': {e}") results.append({ 'Keyword': keyword, 'Category': category, 'Match Score': 'Error', 'Title': 'Error occurred', 'URL': 'N/A' }) return results # Example usage: keywords = [["SEO Tools", "Tools"], ["TikTok", "TikTok"], ["SEO Consultant", "SEO"]] matches = match_keywords_to_index(keywords) # Display the results in a table

print(tabulate(matches, headers="keys", tablefmt="fancy_grid")) And you will see scores generated:

Keyword Matche Scores produced by Vertex AI text embedding model

Keyword Matche Scores produced by Vertex AI text embedding modelTry Testing The Relevance Of Your Article Writing

Think of this as a simplified (broad) way to check how semantically similar your writing is to the head keyword. Create a vector embedding of your head keyword and entire article content via Google’s Vertex AI and calculate a cosine similarity.

If your text is too long you may need to consider implementing chunking strategies.

A close score (cosine similarity) to 1.0 (like 0.8 or 0.7) means you’re pretty close on that subject. If your score is lower you may find that an excessively long intro which has a lot of fluff may be causing dilution of the relevance and cutting it helps to increase it.

But remember, any edits made should make sense from an editorial and user experience perspective as well.

You can even do a quick comparison by embedding a competitor’s high-ranking content and seeing how you stack up.

Doing this helps you to more accurately align your content with the target subject, which may help you rank better.

There are already tools that perform such tasks, but learning these skills means you can take a customized approach tailored to your needs—and, of course, to do it for free.

Experimenting for yourself and learning these skills will help you to keep ahead with AI SEO and to make informed decisions.

As additional readings, I recommend you dive into these great articles:

More resources:

Featured Image: Aozorastock/Shutterstock