When I first got into search marketing (back in 2005), there used to be a Tumblr feed dedicated to poorly set up campaigns that clearly did not have quality assurance (aka QA) checks done.

Dynamic keyword insertion and general broad match were the two fastest ways to end up on that page and one of the quickest ways to ruin your day (possibly even your job or career).

Now, nearly 20 years later, platforms have evolved (or devolved depending on the platform and unit), and the need for proper pre- and post-launch QAs has never been more important.

But with that being said, some operations are still learning the hard way what they did and didn’t remember to check. This goes beyond basic paid search and onto all paid media (search, shopping, PMax, YouTube/video, GDN, and even social and programmatic platforms).

This often leads to competitors finding those mistakes (it is a mistake if you don’t QA) and exploiting them for their own gain.

Full transparency: I do it as well. If I find a brand or another agency making a mistake in their work and can exploit it, I absolutely will.

Yes, in the land of digital marketing, especially when it comes to taking down the competition (not as practical to execute, but if possible, so valuable to you), I will wake up and choose violence. Nearly 20 years in digital marketing will do that to a person (especially if six months of the year they have to watch the NY Jets blow it again).

Everyone makes mistakes sometimes in digital marketing, even me. The key is to make sure the person responsible for running the ad campaign knows what is happening, from pre-launch to the live campaign itself.

Let’s delve into some mistakes that have been found, explain how they could’ve been prevented through a standard ongoing QA process, and cover what you should do in the future to CYA (if you don’t know that acronym, go look it up on Bing).

Some Notable Mistakes

Author Disclaimer: There are literally oodles of different mistakes going on all around us. I will only note the small and large ones I’ve witnessed. For legal reasons, some brands that were the self-induced victims of these mistakes will be anonymized.

In 2011, I was working for a major holding company ad agency, running media for a credit card company that was sponsoring a holiday it had created, held just after Thanksgiving, to encourage shopping at local, non-large businesses (you can guess who).

My team was short-staffed; reps for a major search engine with a major video platform (considered the second-largest search engine in the country) offered to assist my team with the video part by running it for us.

We gladly accepted the help and gave them our targeted keyword and category list for the campaign, and then we gave them the negative keyword and category list. The reps told us they would run it for us, QA it for us, and give us the results.

This was a mistake on our part.

The campaign ran for two weeks, spending around $100,000. But when we got the results from the reps, they were terrible. The video had an incredibly low view rate, a higher-than-normal cost per view, and almost no clicks to the website (we knew it wouldn’t get many, but to get fewer than 100 from 5+ million impressions was odd).

We got our hands on the data and the settings (it was operated in an account we didn’t initially have access to) and discovered the category target list was missing, the keyword targets had been used as the negative keyword list, and worse, the excluded categories and negative keywords had been used as the targets.

Let’s just say the keywords we pulled from Urban Dictionary around intimacy triggered a concerningly high volume of impressions on a variety of non-brand-safe content.

The rep of that major search engine was informed of the faux pas, and they admitted a minimal prelaunch QA had been done, but not thoroughly – and a post-launch QA was never done.

This resulted in a $150,000 credit (keep in mind we only spent $100,000) back to the credit card company. We never saw or heard from that sales rep again.

In 2019, my agency won some new business for a conglomerate of sports nutrition brands. During the kick-off with the brand, they showed us their YouTube data, which was incredibly impressive in terms of non-skippable video.

We’re talking 45+ second videos, with a view rate exceeding 75% at the 30-second mark (the industry benchmark was 35%) and a cost per view (CPV) of less than $0.04.

They informed us that despite the great metrics, there was little to no evidence of direct or down-funnel sales, and they considered the effort a complete failure. Something didn’t make sense, and they asked me to audit the prior agency’s work.

What I found was concerning.

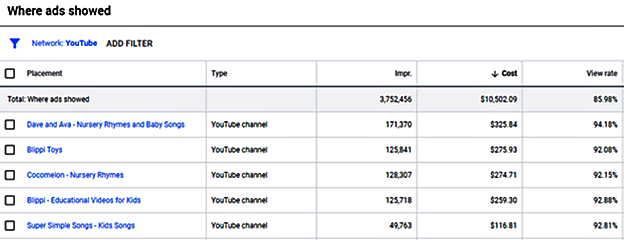

This brand ran video ads featuring incredibly muscular people wearing next to nothing exercising like they were training for the Hunger Games. The campaign spent around $500,000 over a six-month period.

Upon digging in, I realized there was no content targeting, no age targeting, and absolutely nothing in exclusions.

An analysis that took an estimated 120 hours to complete determined that 60% of the ad spend for these scantily clad adults drinking pre-workout and protein shakes had gone to children’s content, such as Blue’s Clues, CoComelon, Blippi, and any parent’s holy nightmare: Caillou.

The view rate and cost per view were impressive, but the campaign generated no sales because the majority of the impressions and views were being served to children ages two to seven.

The prior agency had failed to do a full pre-launch QA, post-launch QA, or even check the data during the flight. This was all taken into consideration, and the brand took the prior agency to court to recover six months of agency fees and media spend (this was settled out of court in the mid-six-figure range).

Screenshot from author, April 2024

Don’t show your workout ads to kids!

Also, if you’re doing retargeting of any sort, know where it shows and have negatives in place!


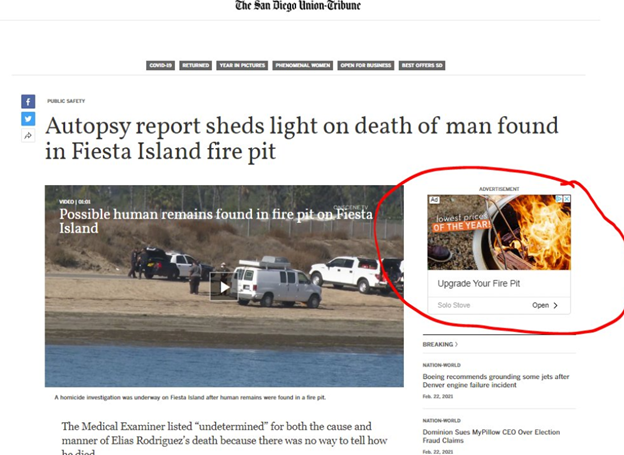

Image from The San Diego Union-Tribune, April 2024


Remarketing is great when you’re prepared for it. A more recent scenario I ran into (in 2024) is a brand I have never worked with, but after finding the same mistake three times in six weeks, it is time to call it out.

I’m sorry, Darden Restaurants digital team. I enjoy the breadsticks at Olive Garden, but this is a simple fix that you still haven’t made.

Recently, on a trip home from skiing, my wife saw a sign for Olive Garden and insisted we pull in for lunch, as she hadn’t been to one in 15 years.

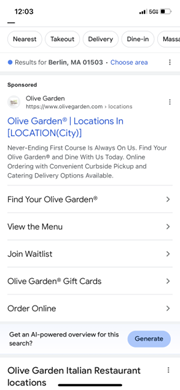

We pulled in, ate, and wondered if there was one near our home for future visits. I pulled out my phone at the table (yes, quite rude, but justified), and searched for [Olive Garden Locations], and got this:


Screenshot of search for [Olive Garden], Google, February 2024


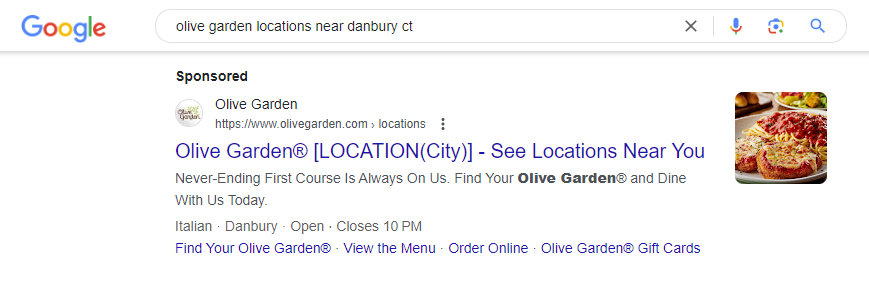

Here again, six weeks later. I mean, come on.


Screenshot of search for [Olive Garden], Google, March 2024


Here, we have a dynamic location insertion put into a search ad (which is normally a great thing to have when set up correctly).

But during the setup, instead of using {}, they used [].

Therefore, it cannot trigger the location; the ad only displays the literal text [Location(City)], delivering a poor user experience and not indicating whether or not a location is nearby.

I repeated this search multiple times over six weeks and realized the advertiser never discovered it. I suspect this was uploaded through a bulk sheet, since a manual insertion into the UI, or even the Editor, shows an obvious callout when it is implemented correctly.
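A bracket mix-up like this is the kind of thing a quick script can catch before a bulk sheet is uploaded. The following is a minimal sketch, assuming the ad copy has been exported as rows of field names and text; the column names, the sample copy, and the list of insertion names to look for are illustrative assumptions, not any platform's export format.

```python
import re

# Matches text such as "[Location(City)]": an insertion function name
# wrapped in square brackets instead of curly braces.
# The list of names checked here is an assumption for illustration.
BRACKETED_INSERTION = re.compile(
    r"\[\s*(location|keyword|countdown|customizer)\b[^\]]*\]",
    re.IGNORECASE,
)


def find_bracketed_insertions(ad_rows):
    """Return (row_number, field, matched_text) for every suspicious placeholder."""
    findings = []
    for row_number, row in enumerate(ad_rows, start=1):
        for field, text in row.items():
            # Empty cells may come through as None; treat them as empty text.
            for match in BRACKETED_INSERTION.finditer(text or ""):
                findings.append((row_number, field, match.group(0)))
    return findings


# Hypothetical rows, shaped like a simplified bulk-sheet export.
sample_rows = [
    {"Headline 1": "Restaurants Near [Location(City)]", "Description 1": "Order online today."},
    {"Headline 1": "Restaurants Near {LOCATION(City)}", "Description 1": "Order online today."},
]

for row_number, field, text in find_bracketed_insertions(sample_rows):
    print(f"Row {row_number}, {field}: found {text!r}; did you mean curly braces?")
```

Only the first sample row is flagged, because the second uses curly braces. A check like this does not replace previewing the live ad, but it is cheap enough to run on every upload.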

Easily Overlooked Future Mistakes

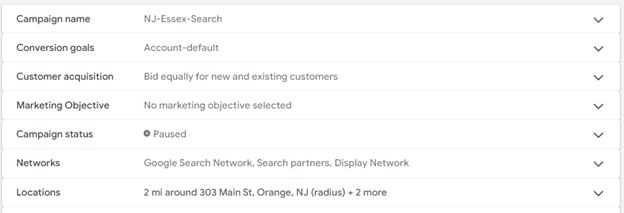

A very common mistake that can be prevented pre-launch, and easily caught post-launch, is one that has been around essentially since the beginning of the industry and lives in both Google AdWords and Bing Ads (it’ll be a cold day in hell before I ever call them Google Ads and Microsoft) and even in Facebook/Instagram (refusing to call it Meta): default settings.

When you first create search campaigns in Google and Bing, some settings are automatically presented to you in a certain way, and you, as an advertiser, must proactively change them (any seasoned search marketer knows this, so this issue is more common with SMBs).

These default search settings include but are not limited to:

- Auto-Apply recommendations on (Google and Bing specific).

- Dynamic extensions on (Google and Bing specific).

- Advantage+ on (Facebook/Instagram specific).

- Display Network/Audience Network on (Google and Facebook/Instagram specific, Bing did away with the ability to opt out of their network a couple of years ago).

- Search Partner/Search Syndication Networks (Google and Bing specific).

- Mobile app placement (Google Display Network specific).

- Broad match keywords (Google and Bing specific when you add keywords without a specified match type).


Screenshot from author, April 2024

Google is gonna Google to make that bread off those not paying attention.

And that is just the tip of the iceberg. Just because you don’t have the ideal or approved assets to put in these places doesn’t mean you won’t be accruing traffic and spend here.

Unless you plan to have them enabled, they need to be changed.
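One way to make the defaults check repeatable is to write the list down as data and compare every campaign against it. The sketch below assumes the campaign settings have already been copied into a plain dictionary by hand or from an export; the key names and the `audit_defaults` helper are hypothetical labels, not platform API fields, and it only covers the search-side items from the list above.

```python
# Defaults that should be OFF unless the plan explicitly approves them.
# Keys are hypothetical labels chosen for this sketch.
RISKY_DEFAULTS = {
    "auto_apply_recommendations": "Auto-apply recommendations",
    "dynamic_extensions": "Dynamic extensions",
    "display_network": "Display Network/Audience Network",
    "search_partners": "Search Partner/Search Syndication Networks",
    "mobile_app_placements": "Mobile app placements",
}


def audit_defaults(campaign, approved=()):
    """List risky defaults that are enabled (or unverified) and not approved."""
    issues = []
    for key, label in RISKY_DEFAULTS.items():
        if key in approved:
            continue
        # A missing value counts as a failure: unverified is not the same as off.
        state = campaign["settings"].get(key)
        if state is None:
            issues.append(f"{campaign['name']}: {label} was never checked")
        elif state:
            issues.append(f"{campaign['name']}: {label} is still enabled")
    # Keywords added without a match type end up as broad match.
    for keyword in campaign.get("keywords", []):
        if not keyword.get("match_type"):
            issues.append(f"{campaign['name']}: keyword '{keyword['text']}' has no match type")
    return issues


# Made-up campaign for illustration.
campaign = {
    "name": "Brand - Search",
    "settings": {
        "auto_apply_recommendations": False,
        "dynamic_extensions": True,
        "display_network": True,
        "search_partners": True,
        "mobile_app_placements": False,
    },
    "keywords": [
        {"text": "running shoes", "match_type": "exact"},
        {"text": "trail shoes", "match_type": ""},
    ],
}

for issue in audit_defaults(campaign, approved={"search_partners"}):
    print(issue)
```

The `approved` argument is there so that a setting you deliberately left on does not show up as noise on every run.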

Needless to say, in each of these scenarios, pre-launch, post-launch, and ongoing QA efforts can prevent some of these catastrophes from happening.

I should note that efforts tied to Performance Max, demand generation, and Advantage+ are a bit harder to QA.

But not QAing them is like telling Jenn Shah of RHOSLC that you trust her customer CRM lists are safe and legitimate.

QA To Save the Day

Now that the fear of digital marketing God is in you, let’s calm you down and discuss how not to have a terrible day with the CMO who has seen your ads live.

This will give them and you more confidence and prevent a conversation more painful than the time I put my head in a snowblower (per editor’s request, a photo of that is not included).

There are three phases of a QA plan: pre-launch, post-launch, and ongoing (spoiler, the third phase is ongoing, in perpetuity, but is just part of your basic optimization strategy).

- Pre-launch: A standardized checklist that you go through for all settings to make sure elements are set before launch. Includes targeting, exclusions, budgeting, assets, etc.

- Post-launch: This is very similar to the pre-launch list, but it includes analysis of initial data to look for anything out of place, such as queries you map to, sites you trigger on, networks, disapproved assets, etc. This should be done within 24 to 72 hours of launch, after accruing data.

- Ongoing: This ties directly to your ongoing optimization but sits alongside it. Think of it as an ongoing post-launch checklist that is repeated once a month. This isn’t a formal optimization document or analysis but an ongoing settings check.
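For the post-launch and ongoing phases, much of the work is looking at where spend actually landed. As a rough sketch, assuming a placement report has been exported and each row already carries a content category (the field names, category labels, and numbers below are made up for illustration), the share of spend going to categories that should have been excluded can be computed like this:

```python
from collections import defaultdict


def spend_share_by_category(placements):
    """Return each content category's share of total spend (0.0 to 1.0)."""
    totals = defaultdict(float)
    for row in placements:
        totals[row["category"]] += row["spend"]
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {}
    return {category: spend / grand_total for category, spend in totals.items()}


def flag_excluded_categories(placements, excluded, tolerance=0.0):
    """List excluded categories whose share of spend exceeds the tolerance."""
    shares = spend_share_by_category(placements)
    return sorted(
        (category, share)
        for category, share in shares.items()
        if category in excluded and share > tolerance
    )


# Made-up numbers for illustration only.
report = [
    {"placement": "Channel A", "category": "kids", "spend": 600.0},
    {"placement": "Channel B", "category": "fitness", "spend": 300.0},
    {"placement": "Channel C", "category": "sports", "spend": 100.0},
]

for category, share in flag_excluded_categories(report, excluded={"kids"}):
    print(f"Excluded category '{category}' received {share:.0%} of spend")
```

Running the same check 24 to 72 hours after launch, and then monthly, turns "check the placements" into a pass/fail item rather than a judgment call.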

The Takeaway

If you’ve read my articles before, you recognize that this isn’t the first time I’ve written about something like this.

However, I’ve witnessed operations/individuals not follow QA protocols, even the most basic ones. Once a mistake is noticed internally and not rectified, it can go up the chain fast and can be as bad as losing one’s job.

But if the general public catches a mistake and calls you out on it, well, an apology press conference and campaign can cost the operation tens of thousands of dollars.

A simple ongoing checklist for the life of the campaign will save you a lot of pain and suffering later. It is part of any solid optimization strategy, so it’s not like you aren’t already doing it.

If you need inspiration on what one should look like, feel free to reach out to me, and I can get you in the right direction.


Featured Image: PKStockphoto/Shutterstock